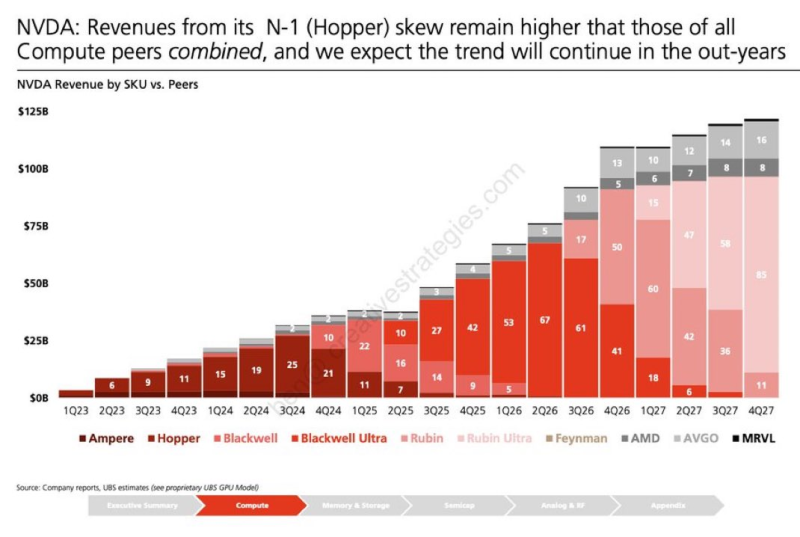

⬤ Nvidia continues to dominate AI compute revenue. Fresh data shows that Hopper-generation GPUs still bring in more revenue than all competing platforms combined, and that is before the Blackwell and Rubin architectures reach full scale. This underscores how strong Nvidia's position remains as demand for AI infrastructure keeps climbing across cloud providers and enterprise buyers.

⬤ The revenue breakdown shows Hopper accounting for a massive chunk of compute sales, while newer Blackwell and Blackwell Ultra chips start ramping and Rubin platforms appear on the horizon. When you stack Nvidia's compute revenue against rivals like AMD, Broadcom and Marvell, the gap is striking. Even as the product lineup shifts toward next-gen systems, Hopper remains a cornerstone revenue driver.

Hopper still drives higher compute revenue than all rivals combined, the data confirms.

⬤ What's interesting here is Nvidia's N-1 strategy: keeping previous-generation GPUs on the market alongside newer ones. Hopper, once the flagship, continues pulling in more revenue than every competitor's compute platform put together, while Blackwell and Rubin add layers of growth on top. The forecast points to sustained AI infrastructure spending with Nvidia sitting right at the center.

⬤ This matters because Nvidia's revenue dominance shapes the entire semiconductor sector's competitive playbook. Heading into the next upgrade cycle with Hopper alone outearning all peers combined gives the company serious leverage, reinforcing its ecosystem advantage and market influence in AI computing.

Peter Smith
