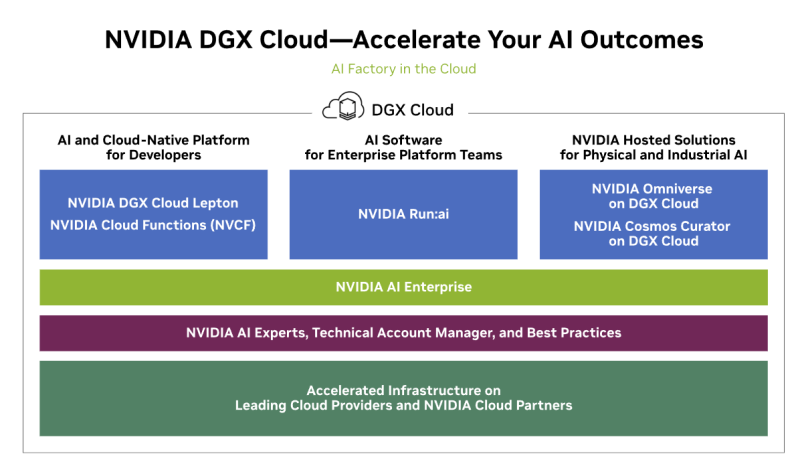

⬤ NVIDIA (NVDA) is drawing closer attention over its future role in AI cloud infrastructure. Debate is growing over whether the company could shift from being mainly a chip maker to a full AI cloud software and services provider. The DGX Cloud framework is positioned as an "AI Factory in the Cloud," bundling compute power, software tools, and managed services into one vertical stack.

⬤ The DGX Cloud setup focuses on delivering an integrated AI platform rather than just raw infrastructure. Developers get access to DGX Cloud Lepton and NVIDIA Cloud Functions for building and launching AI projects. Enterprise teams can use NVIDIA Run:ai to orchestrate compute resources. Everything ties together through NVIDIA AI Enterprise, backed by technical support and account managers, which signals a service-heavy approach rather than a hardware-only one.

⬤ The platform also runs NVIDIA-hosted solutions for physical and industrial AI applications, including Omniverse and Cosmos Curator. The infrastructure layer runs through major cloud providers and NVIDIA partners, suggesting DGX Cloud isn't trying to replace AWS, Azure, or Google Cloud. Instead, it's an AI-specialized layer sitting on top of existing hyperscale clouds while tightly weaving in NVIDIA's software and hardware.

⬤ This conversation matters because it hints at how NVIDIA's business model might change as AI workloads grow. Pushing deeper into cloud software, orchestration, and managed services could unlock new revenue streams and boost recurring income tied to the NVIDIA ecosystem. DGX Cloud points to a potential evolution toward higher-margin platform offerings, which could strengthen NVIDIA's position in the AI infrastructure race that is reshaping competition in the tech sector.

Peter Smith
