● Tech analyst Alex Prompter recently spotlighted Google's launch of Coral NPU, calling it a game-changing moment for edge AI. This isn't an AI accessory that offloads the heavy lifting to the cloud. Instead, Coral NPU brings serious AI horsepower straight to your wrist, ears, or glasses, running continuously on a power budget of just a few milliwatts, roughly what a smartwatch spends on a buzz notification.

● The chip delivers up to 512 GOPS (giga-operations per second, or 512 billion operations every second), which is enough to run compact transformer models comfortably on-device. Picture this: AR glasses running Gemini natively, earbuds translating conversations in real time, or smartwatches understanding context, all without phoning home to a data center. You get snappier responses, and your personal data stays on the device.
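
● To put 512 GOPS in perspective, here is a back-of-the-envelope sketch. It assumes the common rule of thumb that generating one token with a transformer costs about two operations (one multiply, one add) per model parameter, and that the chip sustains 100% of its peak rate, which real workloads never do; memory bandwidth and utilization will pull actual numbers well below this ceiling. The model sizes are hypothetical examples, and `max_tokens_per_second` is an illustrative helper, not part of any Coral NPU toolkit.

```python
PEAK_OPS_PER_S = 512e9  # 512 GOPS, the peak figure quoted for Coral NPU


def max_tokens_per_second(peak_ops_per_s: float, num_params: float) -> float:
    """Theoretical upper bound on transformer decode throughput.

    Assumes ~2 operations (multiply + add) per parameter per generated token
    and 100% utilization of the peak rate. Hypothetical helper for illustration.
    """
    ops_per_token = 2 * num_params
    return peak_ops_per_s / ops_per_token


# Hypothetical model sizes: 10M, 100M, and 1B parameters.
for params in (10e6, 100e6, 1e9):
    bound = max_tokens_per_second(PEAK_OPS_PER_S, params)
    print(f"{params / 1e6:>6.0f}M params -> at most {bound:,.0f} tokens/s")
```

Under these assumptions, a 10M-parameter model tops out around 25,600 tokens per second and a 1B-parameter model around 256, which suggests why compact models are the natural fit for always-on wearables.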

● Alex Prompter's description of the design as "architectural surgery" is apt. Google built Coral NPU from the ground up around AI workloads: a matrix engine at its center, an architecture based entirely on RISC-V, a fully open-source design, and hardware-level privacy safeguards. The best part? It supports TensorFlow, JAX, and PyTorch out of the box, so developers don't have to wrestle with compatibility headaches or accept performance trade-offs.

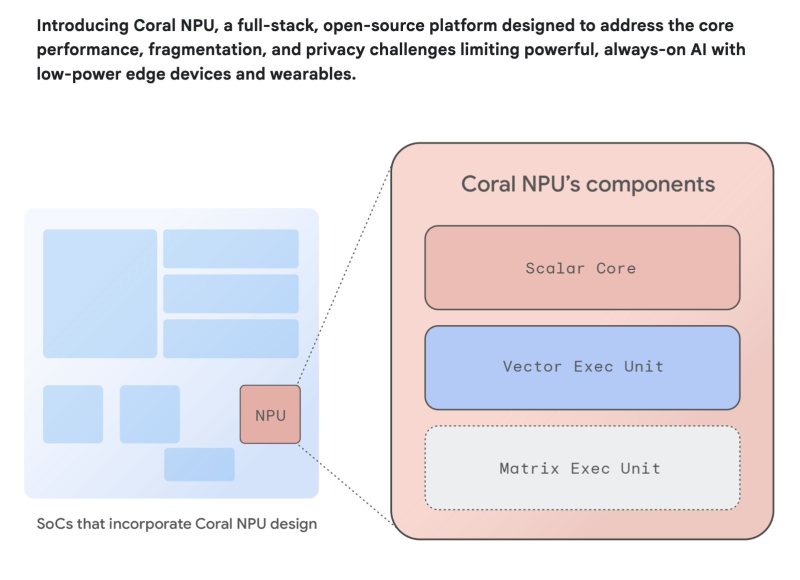

● Google's technical docs break the Coral NPU into three key pieces: a Scalar Core for general-purpose control tasks, a Vector Execution Unit for parallel data crunching, and a Matrix Execution Unit for the heavy math behind AI models, all tuned to squeeze maximum performance from minimal power. And this isn't vaporware: Synaptics is already mass-producing chips based on this design, which means real products are hitting the market now.

Peter Smith
