Google's AI development is accelerating. Signals from the Gemini website suggest that Nano Banana 2 (internally known as "GEMPIX2") is nearly ready for release and would bring native visual generation capabilities to Gemini's interface.

A Glimpse Into the Future of Gemini

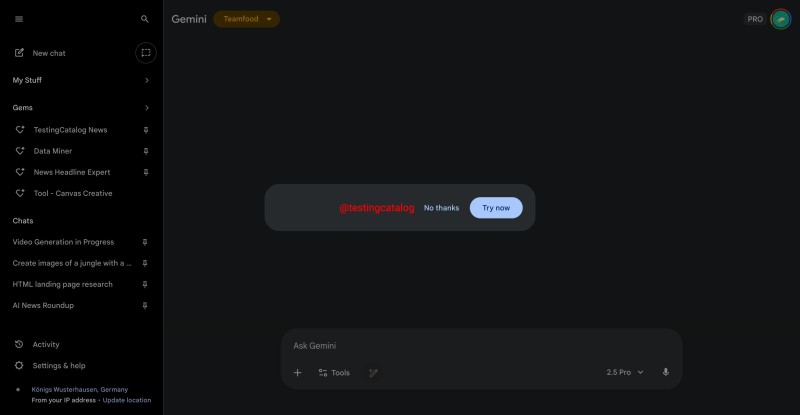

According to TestingCatalog News, a tracker of Google's experimental features, the company is preparing to launch the model within weeks. A screenshot of the Gemini interface shows a new "Try now" prompt, which points to Nano Banana 2 being integrated into the product.

Nano Banana is Google's codename for lightweight multimodal AI models handling text and visuals. The "GEMPIX2" designation suggests that the new model belongs to Gemini's visual generation branch, designed for image synthesis, video previews, and AI-driven visual storytelling.

What Nano Banana 2 Could Bring

While Google hasn't released specifications, Nano Banana 2 is expected to deliver faster image rendering and more responsive multimodal interactions. GEMPIX2 will likely power visual tools across Gemini, NotebookLM, and Workspace, allowing users to generate visuals from prompts, create animated explainers, and enhance conversations with contextual images.

Gemini's Multimodal Expansion

GEMPIX2 fits naturally into Google's current AI trajectory. In recent months, Gemini has evolved beyond language capabilities, adding code reasoning and file analysis. With Nano Banana 2, Google would introduce native visual generation, potentially competing with OpenAI's Sora and Meta's Emu Video. The model could also support NotebookLM's Video Overview feature with its new "Custom Visual Style" setting.

Why This Matters

Nano Banana 2's integration could reshape Google's AI ecosystem. Instead of offering separate creative tools, Gemini could enable end-to-end multimodal creation in which a single conversation produces research, visuals, and videos. For creators and developers, this could mean faster workflows that merge text, visuals, and sound, plus enhanced productivity tools across Google Workspace.

Victoria Bazir
