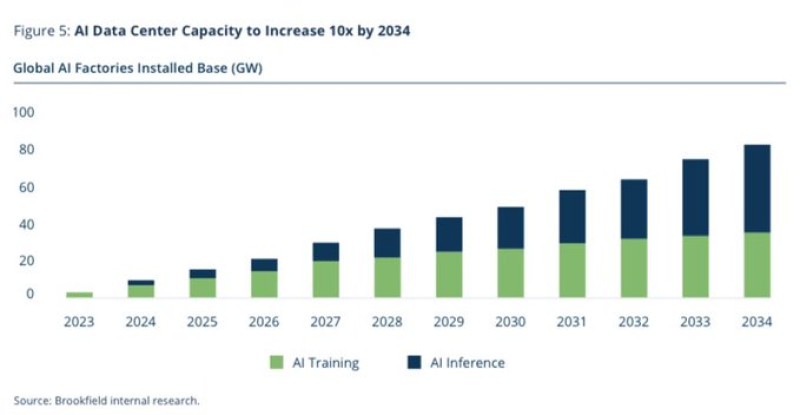

⬤ AI data centers will expand sharply over the next ten years, and the details matter to chip investors. New forecasts show total global AI data center capacity rising to ten times its present size by 2034. A key change is expected in 2032, when inference workloads are forecast to overtake training workloads. Training holds the larger share today, but that share is set to shrink as the market matures.

⬤ The figures show a clear pattern. Training capacity rises at an even pace through the late 2020s, while inference capacity climbs steeply as 2032 nears. By that year, inference is projected to exceed training in installed gigawatt capacity. This shift could alter how firms plan for compute demand. AMD now trades at about fifteen times its projected 2028 earnings, a valuation that appears attractive if the inference surge unfolds as forecast.

⬤ NVIDIA is often associated with chips that target training, so the move toward inference raises questions about its future position. As inference expands, the field could become more open, and AMD could widen its presence in data centers. The change in workloads is not a minor technical point: it shows that AI use is moving from model building to large-scale, real-world service.

⬤ The trend matters because long-run compute patterns steer capital budgets and product plans across the semiconductor sector. If inference dominates, firms will have to revise their strategies. AMD could gain a stronger position in a diversifying market in which rival chip architectures compete under new rules.

Peter Smith
