Artificial intelligence isn't just transforming how we work and communicate. It's also reshaping global energy dynamics. What started as a computational challenge has grown into an infrastructure problem on the scale of entire nations' energy needs. The numbers coming out of OpenAI's long-term planning documents are hard to wrap your head around, and they're forcing us to reconsider what powering the AI revolution actually means.

The Growth Trajectory Nobody Saw Coming

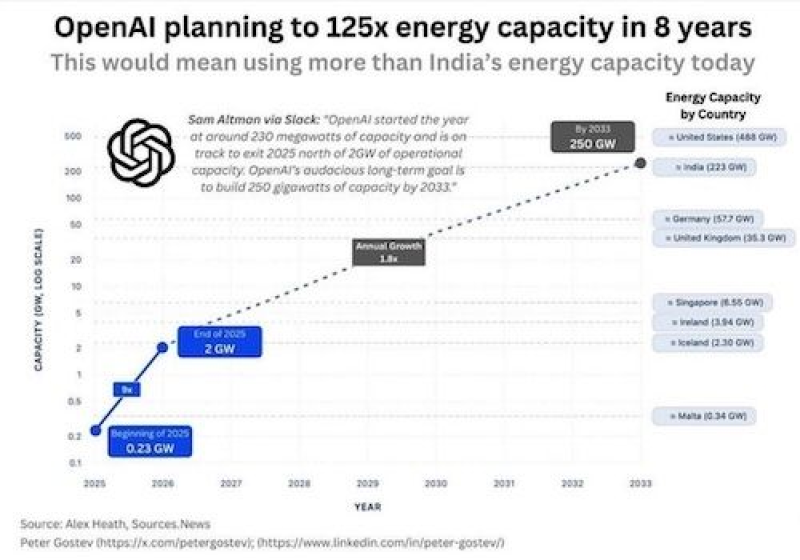

According to a recent analysis by Lukas Ekwueme, OpenAI is planning something unprecedented: expanding its electricity demand 125-fold over the next eight years, to 250 gigawatts of capacity by 2033. That's not just a big number. By that analysis it's more power than India currently generates, and it leaves countries like Germany and the UK in the dust. This isn't incremental growth. It's a complete reimagining of what a single organization's energy footprint can look like.

Let's break down what OpenAI's expansion actually looks like. At the start of 2023, they were running on about 0.23 gigawatts. By the end of 2025, they're targeting 2 gigawatts, already a roughly ninefold increase. But that's just the warm-up. The real jump happens between now and 2033, when they're projecting they'll need 250 gigawatts to keep their systems running, another 125-fold increase. Growth at that pace is basically unheard of in infrastructure development. We're watching one of the fastest buildouts in history unfold in real time.
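To make those multiples concrete, here is a minimal Python sketch that recomputes them from the three capacity figures quoted above. The constant-rate growth model and the function names are assumptions made for illustration; nothing in the source says the buildout follows a smooth curve.

```python
# Capacity figures quoted in the article, in gigawatts.
START_2023_GW = 0.23
END_2025_GW = 2.0
TARGET_2033_GW = 250.0


def growth_multiple(start_gw: float, end_gw: float) -> float:
    """How many times larger end_gw is than start_gw."""
    return end_gw / start_gw


def implied_annual_growth(start_gw: float, end_gw: float, years: int) -> float:
    """Constant yearly growth rate that turns start_gw into end_gw over `years`.

    Assumption for illustration: growth compounds at the same rate every year.
    """
    return (end_gw / start_gw) ** (1 / years) - 1


multiple_2023_2025 = growth_multiple(START_2023_GW, END_2025_GW)
multiple_2025_2033 = growth_multiple(END_2025_GW, TARGET_2033_GW)
annual_rate = implied_annual_growth(END_2025_GW, TARGET_2033_GW, years=8)

print(f"2023 to 2025: {multiple_2023_2025:.1f}x")
print(f"2025 to 2033: {multiple_2025_2033:.0f}x")
print(f"Implied annual growth, 2025 to 2033: {annual_rate:.0%}")
```

Under that constant-rate assumption, going from 2 to 250 gigawatts in eight years means capacity growing by a bit over 80 percent every single year.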

What 250 Gigawatts Actually Means in the Real World

Numbers that big tend to lose meaning, so here's what it would take to actually generate that much power. You'd need roughly 250 nuclear reactors, which works out to bringing a new one online every week or two for eight years straight. Or if you wanted to go the fossil fuel route, you're looking at around 357 large natural gas plants. Prefer renewables? Great, but you'd need about 23,000 square kilometers of solar panels, which is roughly the size of New Jersey. Wind power? That'd require around 202,000 square kilometers of turbines, an area about the size of Nebraska. And remember, this is just for one company. When you factor in Google, Anthropic, Meta, and everyone else racing to build bigger models, the total energy picture gets even more intense.
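The comparisons above can be sanity-checked by working backward to the per-unit figures they imply. The sketch below does only that division. It assumes the 250 gigawatts is split evenly across units and does not distinguish nameplate capacity from average output, since the source doesn't say which is meant. The variable names are illustrative.

```python
# Figures quoted in the article.
TARGET_GW = 250.0
YEARS = 8
REACTORS = 250
GAS_PLANTS = 357
SOLAR_KM2 = 23_000
WIND_KM2 = 202_000

WEEKS_PER_YEAR = 52  # approximation, ignores the extra day or two per year

# Implied size of each plant if the target is split evenly.
gw_per_reactor = TARGET_GW / REACTORS
gw_per_gas_plant = TARGET_GW / GAS_PLANTS

# Implied build pace: weeks available per reactor over the eight years.
weeks_per_reactor = YEARS * WEEKS_PER_YEAR / REACTORS

# Implied power density of the land-area estimates, in megawatts per km^2.
solar_mw_per_km2 = TARGET_GW * 1000 / SOLAR_KM2
wind_mw_per_km2 = TARGET_GW * 1000 / WIND_KM2

print(f"Per reactor:   {gw_per_reactor:.2f} GW")
print(f"Per gas plant: {gw_per_gas_plant:.2f} GW")
print(f"Build pace:    one reactor every {weeks_per_reactor:.1f} weeks")
print(f"Solar density: {solar_mw_per_km2:.1f} MW per km^2")
print(f"Wind density:  {wind_mw_per_km2:.1f} MW per km^2")
```

Read this way, the comparisons imply about 1 gigawatt per reactor, about 0.7 gigawatts per gas plant, roughly 11 megawatts per square kilometer for solar, and a little over 1 megawatt per square kilometer for wind.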

Why AI Eats Energy for Breakfast

So why does artificial intelligence need so much power in the first place? It comes down to the models themselves. Modern large language models have billions or even trillions of parameters, and every one of them takes part in the computation. Training a model from scratch means running thousands of GPUs in parallel for weeks or months. But training is just the beginning. Once these models are deployed and handling millions of queries every day, the ongoing operational costs are enormous. Every conversation you have with ChatGPT, every image generated, every piece of code written by an AI assistant requires real computational work in a data center somewhere.

As companies push toward more capable systems and chase artificial general intelligence, those computational demands only multiply. What we're seeing isn't just OpenAI's appetite. It's a sign of where the entire industry is headed. Google DeepMind, Anthropic, xAI, and others are all scaling up at the same time, creating what amounts to an energy arms race where access to power becomes just as critical as access to talent or capital.

Usman Salis
