OpenAI has introduced a major benchmark for testing how AI models handle India's linguistic and cultural diversity. The IndQA dataset evaluates model performance on Indian languages, regional nuances, and culturally grounded questions.

What IndQA Measures

According to AshutoshShrivastava, a platform tracking AI developments, OpenAI launched IndQA (Indian Question Answering) to measure AI models' ability to understand and answer questions in Indian languages, including code-mixed and culturally specific contexts. The evaluation tests contextual reasoning, local knowledge, and linguistic nuance rather than just grammar or vocabulary.

IndQA covers 11 Indian languages, including Hindi, Gujarati, Tamil, Bengali, Telugu, and Malayalam, as well as code-mixed Hinglish. It spans 10 cultural domains, such as law, food, literature, media, and religion, and phrases its questions in culturally realistic ways, using idioms and region-specific logic.

This makes it one of the first large-scale tests focused on AI localization for India, where language diversity and code-switching pose complex challenges.

GPT-5 Leads in Indian Language Performance

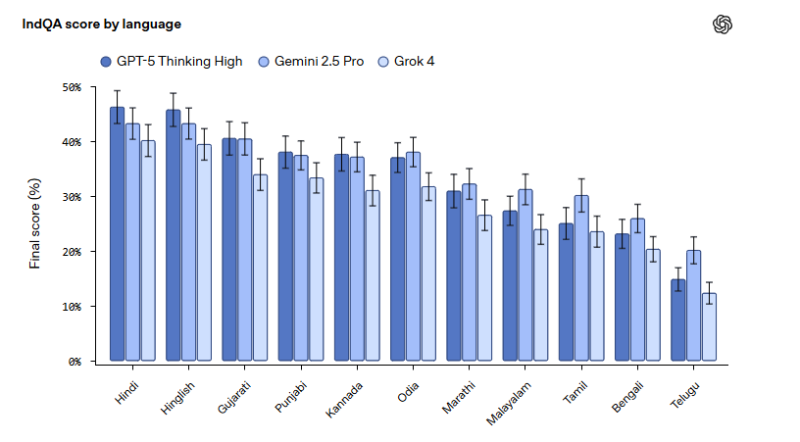

The IndQA scores show clear differences among GPT-5 Thinking High, Gemini 2.5 Pro, and Grok 4. GPT-5 Thinking High achieved the top position across nearly all 11 languages, scoring close to 48% in Hindi, Hinglish, and Gujarati. Gemini 2.5 Pro followed with consistent performance in the low-40% range.

Grok 4 trailed with lower accuracy, particularly in Bengali, Tamil, and Telugu, where its scores fell to roughly 20–25%. The gap may reflect stronger multilingual pre-training in GPT-5, although the scores alone do not establish the cause.

Domain-Wise Performance Reveals Cultural Strengths

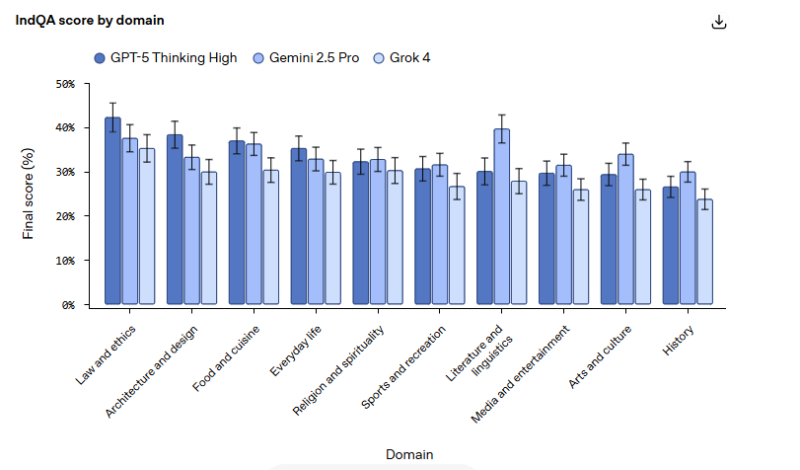

Domain-wise IndQA scores show GPT-5 Thinking High with the strongest overall results. It performs best in Law and Ethics, Food and Cuisine, and Literature and Linguistics, reaching around 45% accuracy. Gemini 2.5 Pro is competitive in Religion and Spirituality and in Media and Entertainment. Grok 4 stays relatively steady in Everyday Life and Sports but is weaker on questions that require deeper cultural inference. Taken together, GPT-5 Thinking High leads in both the language and the domain breakdowns, yet even its best scores stay below 50%, which leaves substantial headroom on the benchmark.

Why IndQA Matters for Global AI

India is one of the world's largest potential AI user bases, with over 1.4 billion people and 22 constitutionally recognized languages. Yet most large language models have been trained primarily on English or Western data. By releasing IndQA, OpenAI sets a precedent for culturally inclusive benchmarking that measures real understanding rather than just translation accuracy. This could encourage the collection of localized training data for underrepresented languages, support multilingual AI projects in education and governance, and inform how cultural context is assessed in future AI policy frameworks.

Eseandre Mordi
