The AI landscape is shifting. Recent OpenRouter data reveals a new frontrunner in the race for developer adoption. xAI's Grok Code Fast 1 has climbed past industry giants - OpenAI's ChatGPT (GPT-5), Google's Gemini, and Anthropic's Claude - to claim the number one spot in both programming tasks and total token usage. For those building software, this represents a meaningful change in how AI models are being chosen and used.

Grok Takes the Lead in Programming

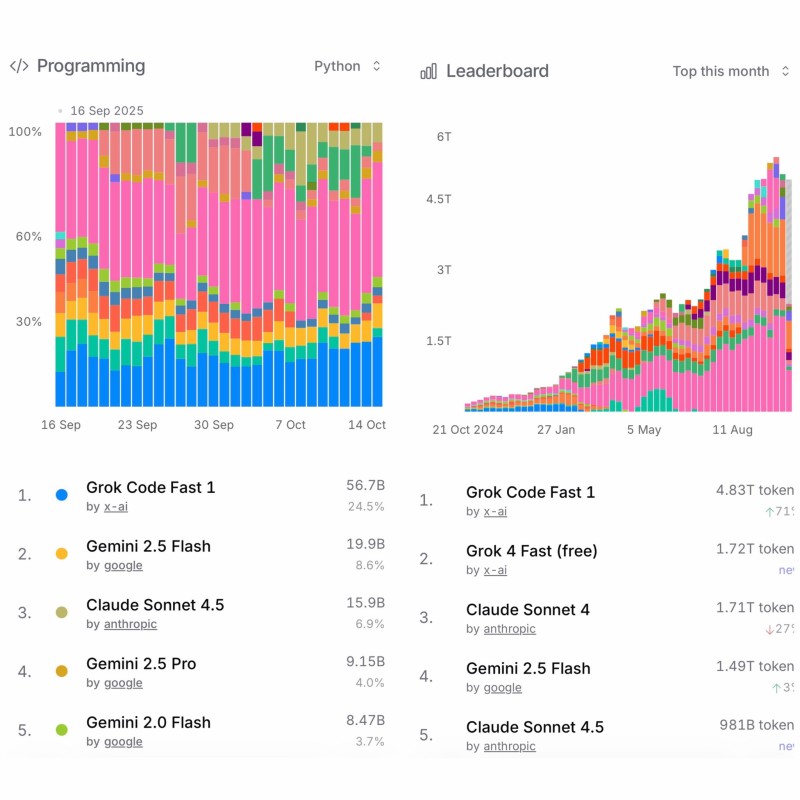

According to prominent crypto trader DogeDesigner, who shared the latest OpenRouter charts, Grok's performance in Python programming workloads tells a compelling story. With 56.7 billion tokens processed and a 24.5% market share, Grok Code Fast 1 stands well ahead of its competition.

Gemini 2.5 Flash follows in second with 19.9 billion tokens (8.6%), while Claude Sonnet 4.5 holds third place at 15.9 billion tokens (6.9%). Gemini 2.5 Pro and Gemini 2.0 Flash round out the top five with 9.15 billion and 8.47 billion tokens respectively. The gap between first and second place is substantial: Grok processed nearly three times as many tokens as Gemini 2.5 Flash, a sizable lead in adoption for coding work.
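As a quick sanity check on the figures quoted above, the short Python sketch below back-calculates the total Python token volume implied by each model's token count and market share, and computes how far Grok leads the runner-up. The dictionary layout and the `implied_total` helper are illustrative assumptions, not part of any OpenRouter API; only the numbers come from the chart as reported.

```python
# Figures as quoted in the article: (tokens in billions, market share in percent).
reported = {
    "Grok Code Fast 1": (56.7, 24.5),
    "Gemini 2.5 Flash": (19.9, 8.6),
    "Claude Sonnet 4.5": (15.9, 6.9),
}


def implied_total(tokens_b: float, share_pct: float) -> float:
    """Hypothetical helper: total volume (billions) implied by one model's row."""
    return tokens_b / (share_pct / 100)


# If the chart is internally consistent, every row should imply a similar total.
totals = {name: implied_total(t, s) for name, (t, s) in reported.items()}

# Ratio of the leader's token count to the second-place model's.
lead_ratio = reported["Grok Code Fast 1"][0] / reported["Gemini 2.5 Flash"][0]

for name, total in totals.items():
    print(f"{name}: implied total of about {total:.0f}B tokens")
print(f"Grok vs. second place: {lead_ratio:.2f}x")
```

All three rows imply a total of roughly 230 billion tokens, so the reported counts and shares agree with one another, with small differences attributable to rounding in the published percentages.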

Total Token Usage Shows Strong Momentum

The overall token usage numbers reinforce Grok's dominance. Grok Code Fast 1 has processed 4.83 trillion tokens, roughly 2.8 times the volume of its nearest competitor. xAI's free Grok 4 Fast comes in second at 1.72 trillion tokens, followed closely by Claude Sonnet 4 with 1.71 trillion. Gemini 2.5 Flash has recorded 1.49 trillion tokens, while Claude Sonnet 4.5 sits at 981 billion. The usage chart's steep upward trajectory suggests developers are not just testing Grok - they're integrating it into their workflows at scale.

What's Driving Grok's Success

Three key factors appear to be behind this surge. First, Grok Code Fast was built specifically for software engineering, giving it a natural advantage in coding tasks. Second, offering both free and paid tiers has lowered the barrier to entry, attracting a wider range of users from hobbyists to enterprise teams. Third, instead of competing purely on model size, xAI focused on speed, cost, and practical usability - qualities that matter most to developers shipping real products. This approach reflects a broader industry shift where specialized, efficient models are proving more valuable than massive general-purpose systems.

What This Means for AI Competition

For xAI, these results validate a strategic bet on lean, task-focused architecture. For the wider industry, it marks a potential turning point. Developer adoption and real-world performance are starting to matter more than brand recognition or parameter counts. This could force OpenAI, Google, and Anthropic to rethink their approaches, while giving newcomers like xAI room to carve out significant market share. The competition is no longer just about who can build the biggest model - it's about who can build the most useful one.

Sergey Diakov
