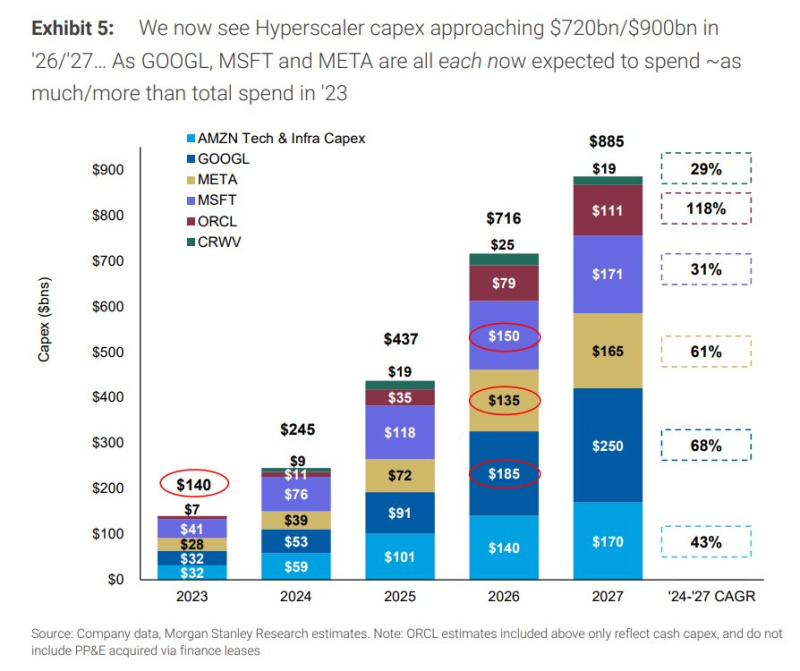

⬤ The world's biggest cloud providers are getting ready to unleash a spending spree that'll reshape the entire AI chip landscape. Hyperscaler capital expenditure is tracking toward $885 billion by 2027, and most of that money's flowing straight into semiconductors built for AI workloads.

⬤ The numbers tell a wild story. Total hyperscaler capex jumped from around $140 billion in 2023 to roughly $245 billion in 2024. The 2025 projection? $437 billion. Then it climbs to $716 billion in 2026 before reaching the projected $885 billion in 2027. Amazon, Google, Meta, and Microsoft are all opening their wallets wider than ever before.
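The growth rates implied by these figures can be checked with a few lines of Python. This is a minimal sketch, not an analyst model: the inputs are the rounded values quoted above, the $437 billion figure is assumed to refer to 2025 (inferred from the sequence), and the names `capex`, `yoy_growth`, and `cagr` are illustrative.

```python
# Hyperscaler capex in billions of USD, rounded figures as quoted in the article.
# Assumption: the $437 billion figure refers to 2025.
capex = {2023: 140, 2024: 245, 2025: 437, 2026: 716, 2027: 885}


def yoy_growth(series):
    """Return {year: percent growth over the previous year}."""
    years = sorted(series)
    return {
        year: (series[year] / series[prev] - 1) * 100
        for prev, year in zip(years, years[1:])
    }


def cagr(series):
    """Compound annual growth rate, in percent, from the first to the last year."""
    years = sorted(series)
    first, last = years[0], years[-1]
    return ((series[last] / series[first]) ** (1 / (last - first)) - 1) * 100


if __name__ == "__main__":
    for year, pct in yoy_growth(capex).items():
        print(f"{year}: {pct:+.1f}%")
    print(f"CAGR 2023-2027: {cagr(capex):.1f}%")
```

On these rounded inputs, annual growth runs at roughly 75% to 78% through 2025, then slows to about 64% in 2026 and about 24% in 2027. The absolute totals keep rising while the growth rate decelerates.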

AI workloads are expected to shift toward inference after 2028, an area where AMD is described as having an advantage due to higher memory capacity in its chips.

⬤ Here's where it gets interesting for AMD. When AI workloads pivot toward inference after 2028, AMD's chips with their beefier memory capacity could steal serious market share. Some analysts are already penciling in valuations near 18 times 2027 earnings for the chipmaker.

⬤ This infrastructure buildout isn't just big — it's historic. The sheer scale of investment shows how seriously hyperscalers are taking the AI race, and it's going to reshape semiconductor demand and data center strategy for years to come.

Saad Ullah
