AI commentator Dan Mac reported that Anthropic is releasing Sonnet 4.5 this week, calling it the company's first real success with scaled reinforcement learning (RL) in post-training. It's the same playbook OpenAI used for GPT-5, and it marks a turning point: the era of simply throwing more compute at models is over.

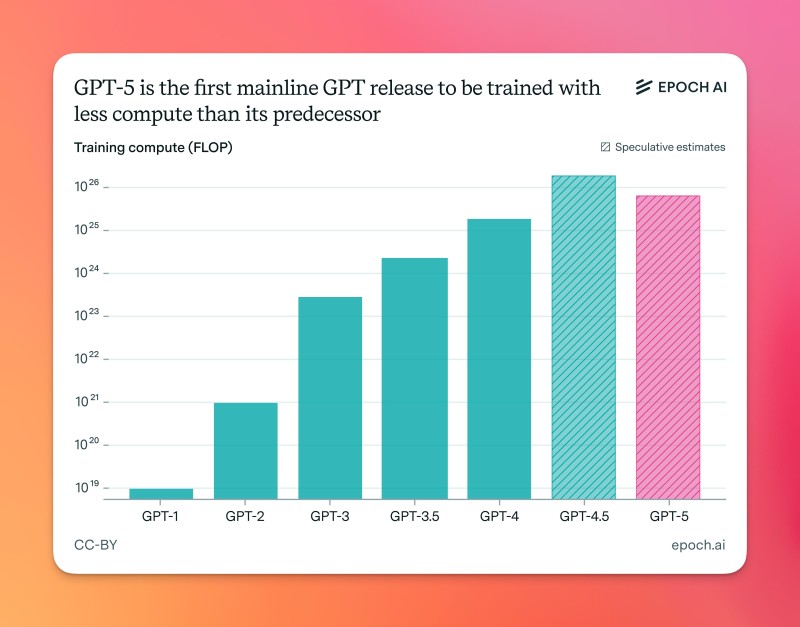

The Compute Plateau

Data from Epoch AI makes the shift plain. By its estimates, GPT-5 is the first mainline GPT model trained with less compute than its predecessor. Every model from GPT-1 to GPT-4 rode an exponential compute curve upward, and GPT-5 breaks that pattern. The takeaway: efficiency and smarter training techniques now matter more than brute force. Anthropic's Sonnet 4.5 follows the same philosophy, betting on scalable RL post-training to make models sharper and better aligned without burning through resources.

What Sonnet 4.5 Brings to the Table

According to the reports, Sonnet 4.5 is expected to lead on SWE-Bench and Terminal-Bench, two benchmarks that test software engineering and command-line skills. It's also supposed to handle 3-hour tasks on METR Long Duration, which measures sustained reasoning, something most LLMs still struggle with. And here's the kicker: it will reportedly match GPT-5 on price, putting Claude squarely in competition with OpenAI for enterprise and developer budgets. If these numbers hold up, Anthropic isn't just keeping pace; it's throwing down the gauntlet.

Why This Approach Works

For years, better AI meant bigger training runs. But as the Epoch AI chart shows, that road is hitting a wall. GPT-5 used less compute than GPT-4.5, a strong sign that smarter methods can beat bigger budgets. Scalable RL post-training is the new edge. For Anthropic, this means higher performance without spiraling costs, better instruction-following over long sessions, and the ability to go toe-to-toe with GPT-5 while keeping prices competitive. It's a fundamental shift in how top-tier models get built.

What It Means for Everyone Else

If Sonnet 4.5 delivers, the ripple effects will be huge. Enterprises could get GPT-5-level performance across more price tiers, which opens doors for smaller companies. Competition heats up fast when Anthropic pushes this hard, and OpenAI, Google, and others will have to move faster. And the real unlock? Models that can reason for hours instead of minutes make new use cases viable: deep research, complex automation, and software development tasks that were previously out of reach.

Peter Smith