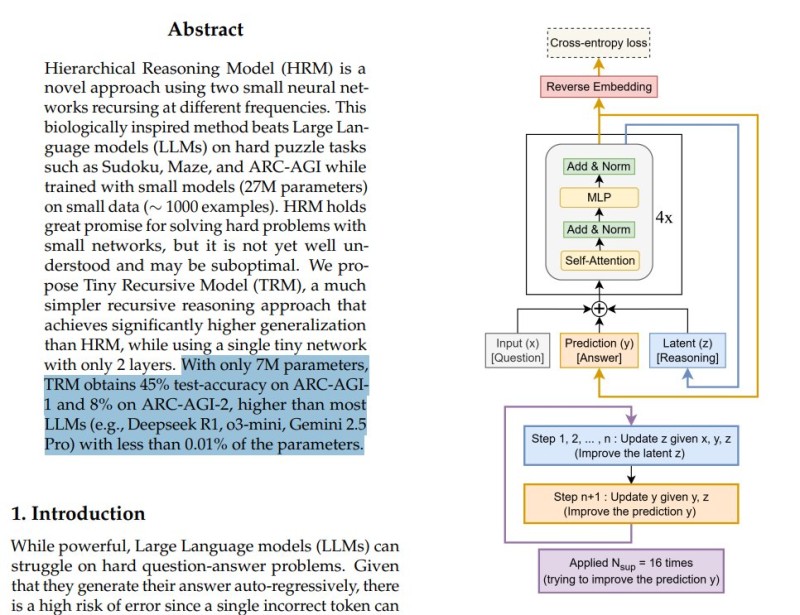

Recent research is flipping conventional AI wisdom on its head. A paper titled "Less Is More: Recursive Reasoning with Tiny Networks" introduces the Tiny Recursive Model (TRM) - a system with just 7 million parameters that outperforms models thousands of times its size. This finding questions whether massive scale is really necessary for AI progress.

Small but Mighty

Trader Haider highlighted this breakthrough, noting how TRM achieved 45% accuracy on ARC-AGI-1 and 8% on ARC-AGI-2.

Meanwhile, much larger models such as DeepSeek R1, o3-mini, and Gemini 2.5 Pro score lower on these benchmarks despite having vastly more parameters. TRM gets there with less than 0.01% of their parameter count.
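To put the "less than 0.01%" figure in perspective, the short Python check below compares TRM's 7 million parameters with DeepSeek R1. R1 is the only model named here whose size is public: 671 billion total parameters (it is a mixture-of-experts model, so fewer are active per token). Sizes for o3-mini and Gemini 2.5 Pro have not been disclosed, so they are left out. The constant and function names are illustrative.

```python
# TRM's 7M figure comes from the article; 671B is DeepSeek R1's published
# total parameter count (mixture-of-experts, so not all are active per token).
TRM_PARAMS = 7_000_000
DEEPSEEK_R1_PARAMS = 671_000_000_000


def size_ratio(small: int, large: int) -> float:
    """Return how many times larger `large` is than `small`."""
    return large / small


def percent_of(small: int, large: int) -> float:
    """Return `small` expressed as a percentage of `large`."""
    return 100 * small / large


ratio = size_ratio(TRM_PARAMS, DEEPSEEK_R1_PARAMS)
pct = percent_of(TRM_PARAMS, DEEPSEEK_R1_PARAMS)
print(f"DeepSeek R1 is about {ratio:,.0f}x larger than TRM")
print(f"TRM has about {pct:.4f}% of DeepSeek R1's parameters")
```

The ratio comes out at roughly 96,000 to 1, or about 0.001%, well under the 0.01% threshold.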

How It Works

TRM relies on recursive reasoning: it repeatedly refines its internal reasoning state and updates its prediction in an iterative loop. Rather than adding parameters, it reuses the same small network across many passes, working through a problem step by step and improving its answer with each pass. This approach delivers strong results without massive infrastructure.
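The toy Python sketch below illustrates what an iterative refinement loop of this kind looks like. It is not TRM's code: the learned network is replaced by two hand-written, hypothetical update rules (`refine_latent` and `update_answer`) applied to a simple problem, approximating a square root. Splitting the state into a latent "reasoning" variable `z`, refined several times per pass, and an answer `y`, updated once per pass, is loosely modeled on the paper's description and should be read as an assumption.

```python
def refine_latent(x: float, y: float, z: float, rate: float = 0.5) -> float:
    """Hypothetical stand-in for the latent update z <- f(x, y, z).

    Nudges the reasoning state z toward the Newton correction that would
    move the current answer y closer to sqrt(x).
    """
    correction = (x / y - y) / 2
    return z + rate * (correction - z)


def update_answer(y: float, z: float) -> float:
    """Hypothetical stand-in for the answer update y <- g(y, z)."""
    return y + z


def recursive_solve(x: float, y0: float = 1.0, n_latent: int = 6,
                    n_outer: int = 20) -> tuple[float, list[float]]:
    """Refine an answer to sqrt(x) by reusing the same two rules each pass."""
    y, z = y0, 0.0
    history = [y]
    for _ in range(n_outer):
        # Inner loop: refine the latent reasoning state several times.
        for _ in range(n_latent):
            z = refine_latent(x, y, z)
        # Then update the answer once, using the refined latent state.
        y = update_answer(y, z)
        history.append(y)
    return y, history


answer, steps = recursive_solve(2.0)
print(f"estimate after {len(steps) - 1} passes: {answer:.6f}")
```

Calling `recursive_solve(2.0)` drives `y` toward √2 ≈ 1.414214. Every pass reuses the same two update rules; the extra accuracy comes from more passes, not from more parameters, which is the intuition the paragraph describes.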

Why This Matters

This isn't just academic curiosity. Tiny models cost less to train and run, consume far less energy, and could work on everyday devices rather than requiring massive data centers. It's a practical shift that makes advanced AI more accessible and sustainable. The research suggests efficiency might matter more than raw size, potentially redirecting how major labs approach future development. If recursive models keep proving themselves, we might see companies rethink their strategies around model architecture rather than simply building bigger systems.

Usman Salis