The AI world is shifting gears. It's not just about building the biggest models anymore; efficiency is becoming just as critical. Alibaba's latest release makes the point well: its compact Qwen3-VL models punch well above their weight class, matching or beating competitors that should theoretically have the advantage. What's particularly striking is that these smaller systems rival performance that required 72 billion parameters just six months ago.

Compact Models, Serious Performance

Alibaba recently unveiled smaller versions of its multimodal AI model, announced in a tweet from the Qwen account. The Qwen3-VL lineup now includes 4B and 8B parameter configurations, each available in both "Instruct" and "Thinking" variants.

These models keep all the multimodal capabilities you'd expect from the Qwen family while dramatically reducing memory requirements. There are even FP8 versions for teams working with tighter hardware budgets, making deployment much more accessible for businesses that can't afford massive infrastructure investments.
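To put the memory savings in rough numbers, here is a back-of-the-envelope sketch. It assumes nominal parameter counts (4, 8, and 72 billion), 2 bytes per weight for BF16, and 1 byte per weight for FP8. None of these figures come from Alibaba's announcement, and the helper name `weight_memory_gb` is made up for illustration. It counts weights only, so real usage will be higher once activations, the KV cache, and runtime overhead are added.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions (not from the article): nominal parameter counts, 2 bytes per
# weight for BF16, 1 byte per weight for FP8. Activations, KV cache, and
# runtime overhead are ignored, so treat the results as a lower bound.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}
NOMINAL_PARAMS = {"4B": 4e9, "8B": 8e9, "72B": 72e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        for dtype in BYTES_PER_PARAM:
            print(f"{name} {dtype}: ~{weight_memory_gb(params, dtype):.0f} GB")
```

Under these assumptions, moving from BF16 to FP8 halves the weight footprint, which is why the FP8 builds matter for smaller GPUs.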

How They Stack Up Against the Competition

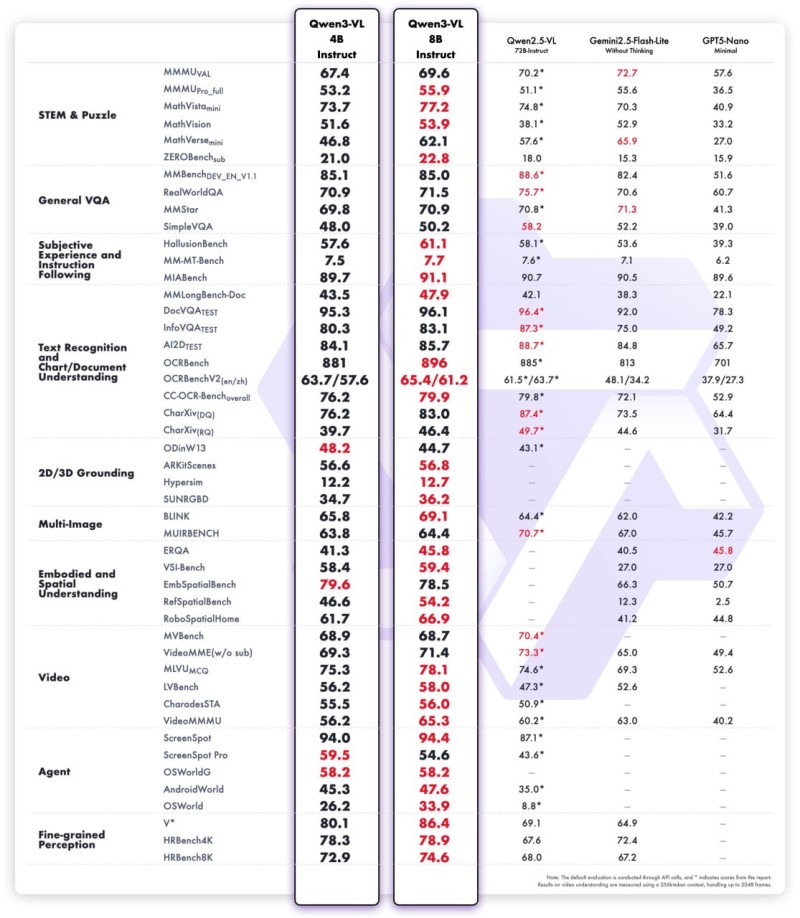

The benchmark results tell an interesting story:

- STEM and puzzle-solving tasks: The 8B model hit 77.2 on MathVistaMain, comfortably ahead of Gemini 2.5 Flash Lite's 70.3 and miles beyond GPT-5 Nano's 39.0.

- General visual question answering: It scored 85.0 on RealWorldQA compared to Gemini's 82.4 and GPT-5 Nano's 51.6.

- OCR and document tasks: It achieved 89.6 on AI2DTest versus Gemini's 84.8, showing strong text recognition capabilities.

- Video understanding: It reached 78.1 on MVUCoA against Gemini's 69.3, demonstrating solid temporal reasoning.

- Agent-based challenges: It scored 52.6 on OSWorldG, beating Gemini's 43.6 in complex interactive tasks.
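For a quick sense of how wide those gaps are, the scores quoted in the list can be turned into point margins. This is only a sketch: it assumes every figure refers to the 8B model versus Gemini 2.5 Flash Lite, and the `margins` helper is a name invented here, not part of any benchmark tooling.

```python
# Scores as quoted in the list above: (Qwen3-VL 8B, Gemini 2.5 Flash Lite).
# Assumption: all five Qwen figures refer to the 8B model.
SCORES = {
    "MathVistaMain": (77.2, 70.3),
    "RealWorldQA": (85.0, 82.4),
    "AI2DTest": (89.6, 84.8),
    "MVUCoA": (78.1, 69.3),
    "OSWorldG": (52.6, 43.6),
}


def margins(scores: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return the point lead of the first score over the second, per benchmark."""
    return {name: round(qwen - gemini, 1) for name, (qwen, gemini) in scores.items()}


if __name__ == "__main__":
    leads = margins(SCORES)
    # Print benchmarks from widest to narrowest lead.
    for name, lead in sorted(leads.items(), key=lambda item: item[1], reverse=True):
        print(f"{name}: +{lead} points")
```

On the quoted numbers, the lead is widest on the agent and video benchmarks and narrowest on RealWorldQA.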

What really catches your attention is that these compact models perform at levels that match Alibaba's own 72B flagship from earlier this year.

Why This Matters

There's a practical reason why everyone's excited about efficient models lately. Sure, systems like GPT-4 and Gemini Ultra dominate the raw performance charts, but they also need data-center-level resources to run. Qwen3-VL offers something different: strong reasoning and multimodal understanding without the massive infrastructure bill. That makes it viable for regular businesses, consumer apps, and edge computing scenarios where you can't just throw unlimited servers at every problem.

What This Means for the Industry

This release signals some meaningful shifts in how AI development is evolving. First, it shows Chinese AI labs are becoming serious competitors on the global stage, matching or exceeding what Google and OpenAI offer in several categories. Second, the focus on FP8 support and lower memory usage suggests these teams are thinking about real-world deployment, not just benchmark bragging rights. Perhaps most importantly, the fact that a compact model today matches what required 72 billion parameters half a year ago demonstrates just how quickly this field is moving.

Saad Ullah
