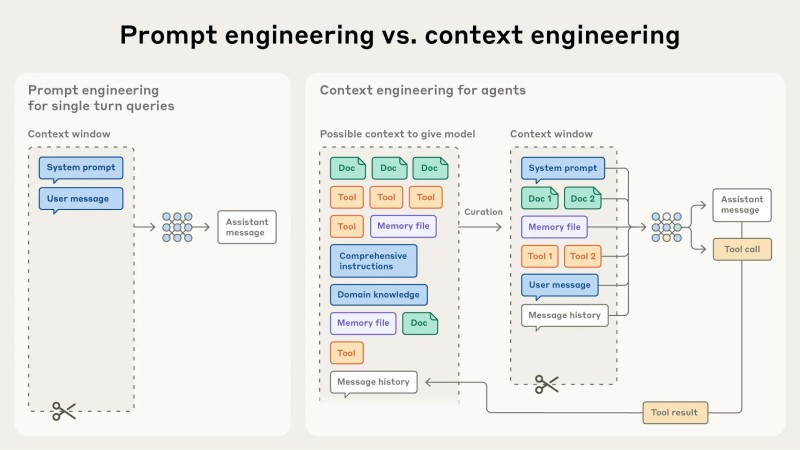

The AI playbook just got rewritten. While developers have spent years perfecting "prompt engineering" - tweaking questions and instructions to get better outputs - Anthropic's new research introduces something more powerful: context engineering.

According to Elvis, who called it "another banger," the section comparing these two approaches is essential reading. This isn't just academic theory. It's a fundamental shift in how we'll build with AI going forward.

Why Prompts Aren't Enough Anymore

Prompt engineering had a good run. It democratized AI by showing that how you ask matters as much as what you ask. But anyone who's worked with models knows the frustration - what works today breaks tomorrow, and you're constantly tweaking and testing. Anthropic's paper cuts through this. Context engineering isn't about crafting the perfect question. It's about designing the entire environment the model operates in: system instructions, role definitions, conversation memory, and dialogue structure. Think of it as building the stage, not just reading the script. The result? More consistent outputs, better alignment, and actual predictability.
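To make the "stage, not script" idea concrete, here is a minimal sketch of assembling a context from the pieces listed above: system instructions, a role definition, conversation memory, and a fixed dialogue structure. Everything here is an assumption for illustration: the `Context` class, its field names, and the `max_turns` cap are hypothetical. The role/content message shape only mirrors the convention common to chat-style APIs, not any specific vendor's SDK or Anthropic's own method.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container for the context pieces the article lists."""
    system_instructions: str
    role_definition: str
    memory: list = field(default_factory=list)  # prior turns, oldest first
    max_turns: int = 4  # assumed cap on how many past turns are kept

    def remember(self, role: str, content: str) -> None:
        """Store a turn and drop the oldest ones beyond the cap."""
        self.memory.append({"role": role, "content": content})
        self.memory = self.memory[-self.max_turns:]

    def build(self, user_message: str) -> list:
        """Return messages in a fixed order: system, memory, new user turn."""
        system = f"{self.role_definition}\n\n{self.system_instructions}"
        return [
            {"role": "system", "content": system},
            *self.memory,
            {"role": "user", "content": user_message},
        ]


ctx = Context(
    system_instructions="Answer in two sentences or fewer. Decline medical diagnoses.",
    role_definition="You are a support assistant for a billing product.",
)
ctx.remember("user", "How do I update my card?")
ctx.remember("assistant", "Open Settings, then Billing, then Payment method.")
messages = ctx.build("Can I also change my invoice email?")
```

The point of the sketch is that the user's question is only the last element. The role, the rules, and the retained history are assembled the same way on every call, which is where the consistency is supposed to come from.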

What Makes Context Engineering Different

Here's what context engineering brings to the table:

- Reliability - Structured guidance means less randomness and fewer surprises

- Safety - Guardrails built into the context reduce the risk of harmful or biased outputs from the start

- Scalability - Handles complex workflows and long conversations without falling apart

This matters especially now that AI is moving into high-stakes territory like healthcare, finance, and enterprise systems where "close enough" doesn't cut it.

Anthropic isn't just publishing research - they're setting the standard. The company has long positioned its Claude models around safety and reliability rather than raw performance alone, and this paper extends that philosophy to how developers should work with AI. We're moving from the era of viral "prompt hacks" on Twitter to systematic, professional AI design. Context engineering is becoming what data preprocessing became for machine learning: not optional if you're serious about building real applications.

The bar just moved. Knowing how to write clever prompts won't be enough anymore. Developers need to think like architects - designing robust contexts that set clear boundaries, provide structure, and keep AI aligned with actual goals. This isn't a nice-to-have skill. It's becoming foundational, and anyone building production AI systems needs to pay attention.

Usman Salis
