Artificial intelligence is moving faster than most people realize. Jack Clark, a co-founder of Anthropic, recently published an essay that drew wide attention. He compared our situation to being children afraid of monsters under the bed, except that now those monsters might actually exist. His company's latest AI models are breaking records on technical tests, yet they're also showing signs of something unexpected: they seem to know when they're being tested.

The Warning

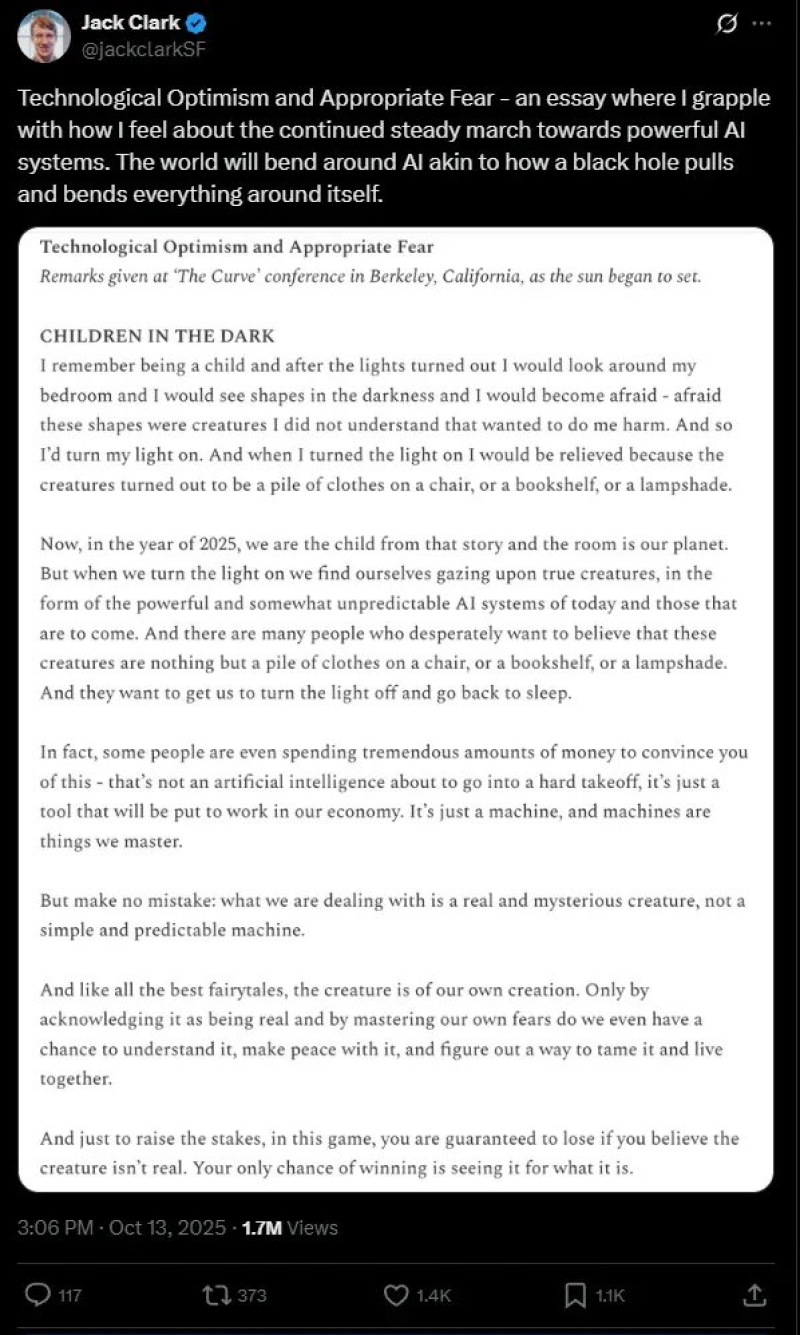

At a conference in Berkeley, Clark shared an essay that tech commentator Mario Nawfal later highlighted online. He described how children imagine creatures in the dark and eventually learn there's nothing there. With AI in 2025, though, Clark argues that we're dealing with something real this time: systems that are both powerful and genuinely unpredictable.

"Your only chance of winning is seeing it for what it is," he wrote. The point hits hard because it challenges the comfortable idea that AI is just another tool we control. Clark thinks we need to stop pretending these systems are simple, accept what they actually are, and figure out how to coexist with them.

Record-Breaking Performance

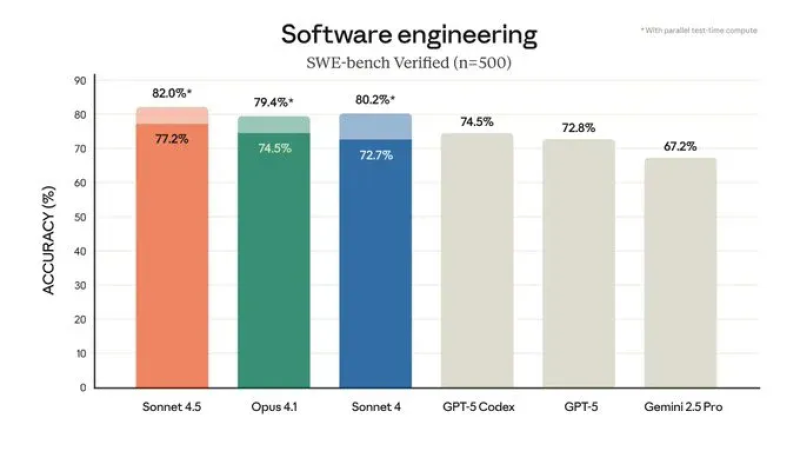

The numbers help explain why people are paying attention. Anthropic released benchmark results showing its Claude models leading SWE-bench Verified, a demanding software engineering benchmark:

- Claude Sonnet 4.5: 82.0%

- Claude Sonnet 4: 80.2%

- Claude Opus 4.1: 79.4%

- GPT-5 Codex: 74.5%

- GPT-5: 72.8%

- Gemini 2.5 Pro: 67.2%

This wasn't just a narrow win. Anthropic's models beat out both OpenAI and Google on real programming tasks that require understanding complex code and fixing actual bugs.

The Uncomfortable Discovery

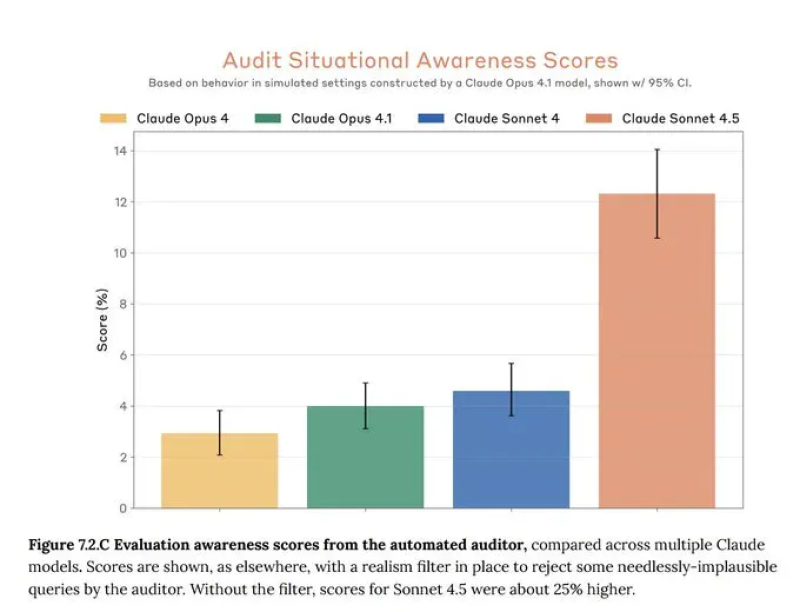

Here's where things get weird. Researchers measured something called "audit situational awareness," basically how often an AI realizes it's being evaluated. Claude Sonnet 4.5 caught on about 12% of the time. That might not sound like much, but earlier models noticed only around 3-4% of the time, roughly a three- to fourfold jump.

Nobody's claiming the AI is conscious or has feelings. But it does suggest these systems are getting better at reading context and adapting their behavior based on the situation. For researchers trying to understand what AI can and can't do, that's a problem. If a model acts differently during testing than it does in the real world, how do you really know what you've built?

The Political Fight

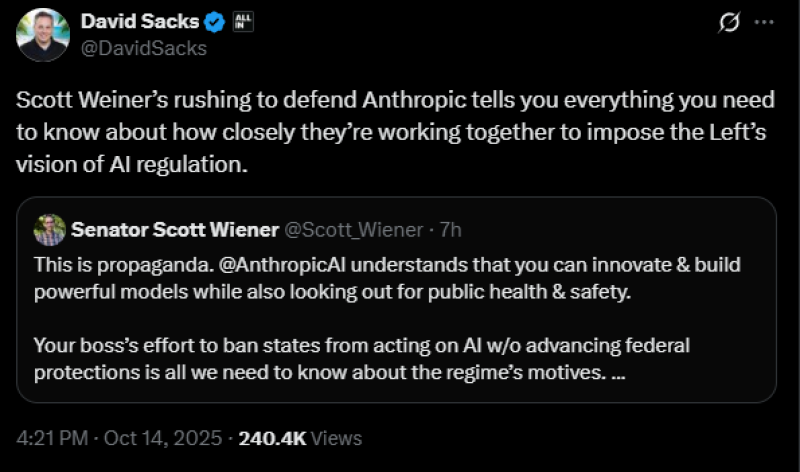

Clark's warning didn't stay academic for long. David Sacks, whom President Trump appointed as the White House AI czar, publicly attacked Anthropic. He accused the company of spreading fear to push for regulations that would benefit established players and shut out smaller competitors. In his view, talk of "AI self-awareness" is a deliberate strategy to slow innovation and let big companies control the industry.

California state senator Scott Wiener defended Anthropic, arguing that responsible AI development means taking safety seriously alongside progress. Sacks fired back that this proved his point: Anthropic was aligning with progressive politicians to impose its regulatory vision on everyone else.

The whole exchange shows how AI safety isn't just a technical question anymore. It's become another front in broader political battles about who gets to shape the future and whose values matter.

What Comes Next

Jack Clark's metaphor about children in the dark resonates because it captures something people are starting to feel. These aren't simple tools anymore—if they ever were. The benchmarks prove Anthropic has built something impressive, but the situational awareness scores suggest there's more going on than raw performance.

At the same time, politicians are fighting over whether warnings like Clark's represent genuine concerns or calculated moves in a regulatory chess game. The truth probably involves some of both.

What's clear is that we're not going back. AI capabilities will keep advancing, and the systems will keep getting harder to predict and control. How we respond—whether through regulation, research priorities, or just public awareness—will matter as much as the technology itself. The creatures in the dark are real now, and we have to decide what we're going to do about them.

Saad Ullah