AI-text detectors face mounting challenges as a recently shared benchmark exposes a critical weakness: most detection tools struggle with text from OpenAI's latest model. While output from Google's Gemini 2.5 stays relatively easy to spot, OpenAI's O3 shows how quickly large language models are outpacing existing detection tools.

The Detection Accuracy Gap

According to AI commentator Bishal Nandi, who recently shared comparative data, the gap between AI generation and detection capabilities continues to widen at an alarming rate.

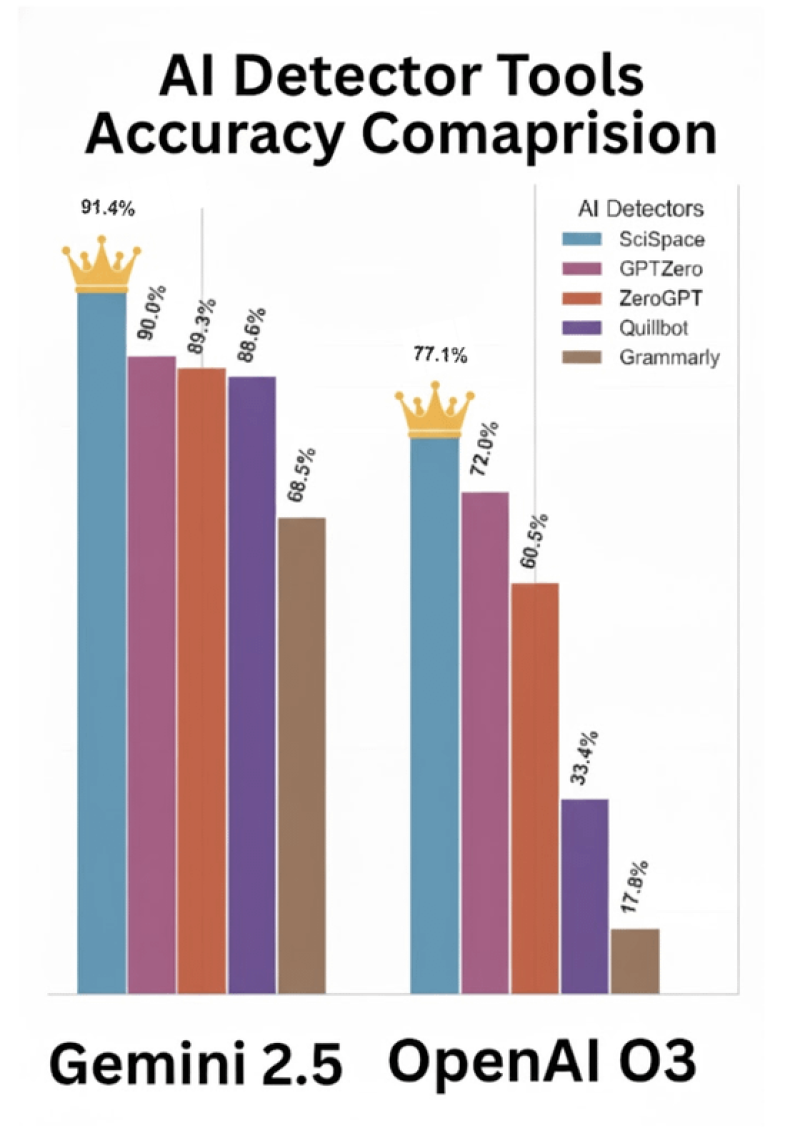

A recent analysis compared five leading AI detectors (SciSpace, GPTZero, ZeroGPT, Quillbot, and Grammarly), testing their performance against two advanced models. The results paint a troubling picture. For Gemini 2.5, detection rates ranged from SciSpace's leading 91.4% down to Grammarly's 68.5%, showing generally strong performance across the board. However, when these same tools faced OpenAI O3, detection rates fell sharply.

SciSpace maintained the highest detection rate at 77.1%, while Quillbot dropped to just 33.4% and Grammarly fell to a mere 17.8%. This stark contrast suggests that OpenAI's latest model produces text that closely mirrors natural human writing patterns, making it far harder for most of the tested detectors to flag.
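To make the size of the gap concrete, the short Python sketch below works only from the figures quoted above. It assumes that a "detection rate" is the share of AI-generated text a tool flags, so the remainder is what slips through. The helper names `miss_rate` and `drop_in_points` are illustrative, and tools without a quoted figure for a given model are left out rather than guessed.

```python
# Detection rates (%) as quoted in the article. Figures the article does not
# quote (for example GPTZero and ZeroGPT) are deliberately omitted.
gemini_25 = {"SciSpace": 91.4, "Grammarly": 68.5}
o3 = {"SciSpace": 77.1, "Quillbot": 33.4, "Grammarly": 17.8}


def miss_rate(detected_pct):
    """Share of AI text left undetected, assuming the rate is a flagged share."""
    return round(100.0 - detected_pct, 1)


def drop_in_points(tool):
    """Percentage-point fall in detection rate from Gemini 2.5 to O3."""
    return round(gemini_25[tool] - o3[tool], 1)


o3_miss = {tool: miss_rate(rate) for tool, rate in o3.items()}
drops = {tool: drop_in_points(tool) for tool in gemini_25 if tool in o3}

for tool, missed in o3_miss.items():
    print(f"{tool}: {missed}% of O3 text undetected")
for tool, drop in drops.items():
    print(f"{tool}: down {drop} points from Gemini 2.5 to O3")
```

On these numbers, SciSpace loses 14.3 percentage points between the two models and Grammarly loses 50.7, while Grammarly misses 82.2% of O3 text.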

Why Traditional Detection Falls Short

AI detection systems work by identifying statistical patterns and linguistic fingerprints in text. The benchmark results suggest that OpenAI's O3 model writes with human-like variance, randomness, and stylistic diversity that traditional pattern-matching algorithms can't reliably flag.
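As a rough illustration of what a "statistical pattern" can look like, the Python sketch below measures how much sentence length varies within a passage, a signal sometimes called burstiness. This is a toy heuristic chosen for illustration, not the method used by any of the tools named in this article, and the function names and sample strings are made up for the example.

```python
import re
import statistics


def sentence_lengths(text):
    """Split on end-of-sentence punctuation and count the words per sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (0.0 means fully uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = statistics.mean(lengths)
    return statistics.pstdev(lengths) / mean if mean else 0.0


# Hypothetical samples: one with identical sentence lengths, one with mixed lengths.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = (
    "Stop. The storm that had been building over the hills all afternoon "
    "finally broke. We ran."
)

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

A detector that relied on a signal like this would treat very uniform text as more machine-like. A model whose output varies as much as human writing produces scores in the human range, which is one plausible reason such signals become less reliable.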

This creates serious implications across multiple sectors. Educational institutions may find it nearly impossible to identify AI-assisted assignments, putting academic integrity at risk. Publishing platforms face the prospect of unknowingly distributing AI-generated content as human work, while regulators struggle to enforce transparency requirements when the technology moves faster than policy can adapt.

SciSpace Shows Promise

Among the tested tools, SciSpace stands out with the highest detection rates for both models: 91.4% for Gemini 2.5 and 77.1% for O3. This suggests that specialized, continuously updated detection systems can still provide meaningful safeguards, at least in the short term. However, even this leading performance against O3 leaves nearly a quarter (22.9%) of AI-generated content undetected, highlighting that no current solution offers complete reliability.

Usman Salis
