Artificial intelligence is advancing faster than almost any industry in modern history, and the infrastructure needed to support it is racing to keep up. Recent data from Melius, highlighted by Holger Zschaepitz, shows how dramatically compute requirements are rising. It also suggests that concerns about an AI bubble may be missing the bigger picture.

The Compute Curve: From Chatbots to Deep Research

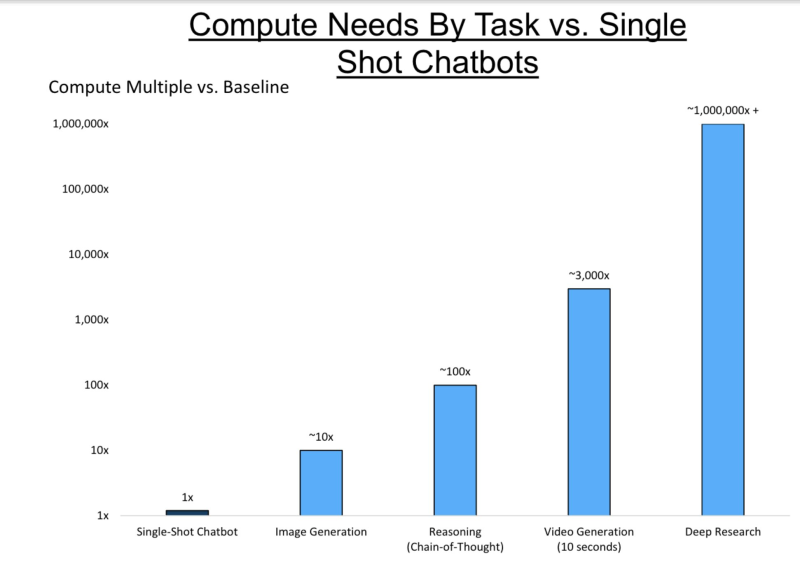

Compared with a basic chatbot response, more sophisticated AI tasks demand staggering amounts of computational power. Image generation needs roughly 10 times the processing power of a simple chat response. Reasoning tasks that use chain-of-thought processing require about 100 times more. Generating just 10 seconds of video takes approximately 3,000 times the baseline compute. And deep research systems? They run at over a million times the computational intensity of that baseline chatbot interaction.

This isn't a gradual increase; it's exponential. Each new capability layer, whether reasoning, multimodal processing, or autonomous decision-making, requires infrastructure expansion on a scale we haven't seen before.

OpenAI's 250 GW Vision

OpenAI is looking at around 250 gigawatts of power capacity for upcoming AI workloads. For context, only about 23 gigawatts have been officially announced across all global AI data center projects combined, so OpenAI's target alone is more than ten times everything announced so far. That gap shows how far current infrastructure lags behind where demand is heading. Products like Sora for AI video, along with Pulse and AgentKit, are pushing compute needs into territory that existing systems weren't designed to handle.

Why the Fundamentals Are Different

Yes, AI company valuations have climbed dramatically, and yes, that often triggers bubble warnings. But the underlying demand tells a different story. The need for compute power isn't speculative—it's measurable, it's growing, and it's outpacing what's currently available. Think about how the internet required massive infrastructure investment before it could reach mainstream adoption. AI is going through something similar, except compressed into a much shorter timeframe. The build-out is happening at hyperspeed.

The companies positioned to benefit are obvious: chipmakers like Nvidia, AMD, and Broadcom; hyperscalers like Microsoft, Google, and Amazon racing to expand their cloud and data center footprints; and specialized infrastructure firms betting on high-efficiency chips and renewable energy solutions.

Saad Ullah