Building cutting-edge AI isn't just about launching the next version of ChatGPT. It's about the countless experiments happening behind closed doors that never make it into the headlines. Research from Epoch AI recently shed light on OpenAI's massive $7 billion compute budget for 2024, revealing something surprising: most of that money didn't go toward training the models we actually use. Instead, it funded a sprawling web of experiments, failed prototypes, and exploratory research that form the invisible backbone of AI progress.

The Numbers Tell a Story

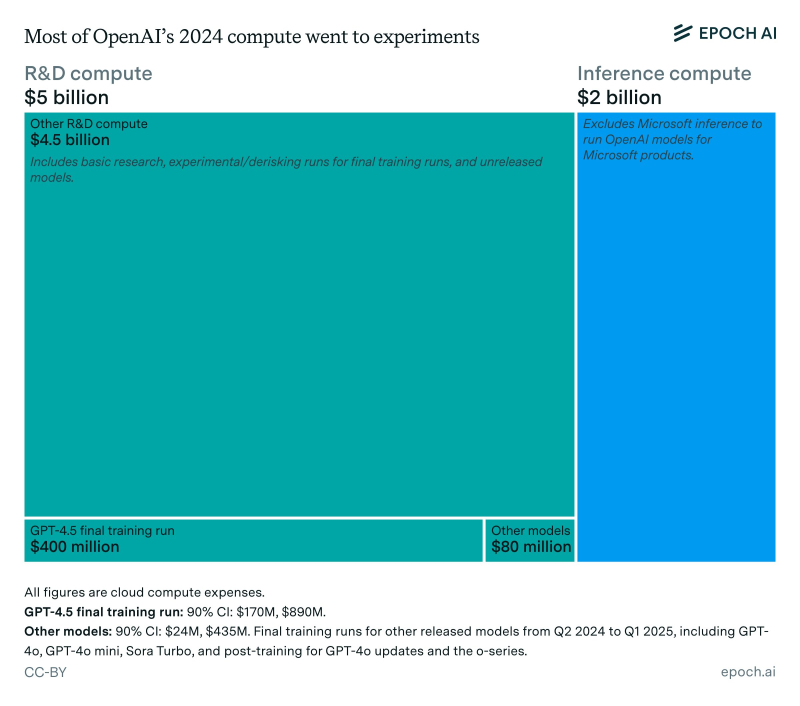

Epoch AI's breakdown shows where OpenAI's compute dollars actually went:

- Total spend: ~$7 billion

- R&D compute: $5 billion (about $4.5B on basic research, experimental runs, and unreleased models, plus $400M on GPT-4.5's final training run and $80M on other models)

- Inference costs: $2 billion (not counting Microsoft's separate inference infrastructure)

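The takeaway? About 71% of OpenAI's compute budget ($5B of $7B) went to R&D, and roughly 64% ($4.5B) went to experiments and unreleased models alone. The final training runs listed above ($480M combined) account for under 7% of the total.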
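To make those percentages concrete, here is a minimal sketch that recomputes each share from the figures listed above. The dictionary keys and the `shares` helper are illustrative labels, not Epoch AI's terminology, and the dollar amounts are the rounded estimates quoted in this article, so treat the output as approximate.

```python
# Rounded estimates from the breakdown above, in billions of USD.
# Key names are illustrative, not Epoch AI's own categories.
SPEND_BILLIONS = {
    "experiments_and_unreleased": 4.5,
    "gpt45_final_training": 0.4,
    "other_models": 0.08,
    "inference": 2.0,
}

# The article quotes a rounded total of ~$7B; the line items sum to $6.98B.
REPORTED_TOTAL = 7.0


def shares(spend, total):
    """Return each line item as a percentage of the total, rounded to 0.1."""
    return {name: round(100 * amount / total, 1) for name, amount in spend.items()}


pct = shares(SPEND_BILLIONS, REPORTED_TOTAL)

# R&D is everything except inference.
rd_billions = sum(v for k, v in SPEND_BILLIONS.items() if k != "inference")
rd_share = round(100 * rd_billions / REPORTED_TOTAL, 1)

for name, value in pct.items():
    print(f"{name}: {value}%")
print(f"R&D combined: {rd_share}%")
```

With these inputs, experiments and unreleased models come to about 64% of the total, and R&D as a whole to about 71%. Swapping in different estimates only requires editing the dictionary.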

Why So Much Goes to Experiments

This isn't wasteful spending. It's how frontier AI actually works. Before OpenAI commits hundreds of millions to a final training run, it needs to validate a long list of architectural choices, training strategies, and scaling decisions. That means running smaller versions, testing different approaches, and learning from models that never ship. Some experiments reduce risk for future billion-dollar bets. Others explore ideas that might not pan out for years, if ever. And many simply fail, but each failure teaches something valuable. The Microsoft partnership also plays a role: Microsoft handles inference for its own products, which frees OpenAI to double down on research.

What This Means for AI's Future

These spending patterns reveal uncomfortable truths about the AI industry. The barrier to entry keeps rising: few organizations can afford multi-billion-dollar compute budgets, which concentrates power among a small number of well-funded players. Training costs are escalating fast. GPT-4.5's final run cost $400 million, and future flagship models could plausibly cross the $1 billion mark per training run. Perhaps most striking is how AI economics really work: most compute spending goes to work that never ships. Progress is cumulative but costly, built on a foundation of dead ends.

Peter Smith
