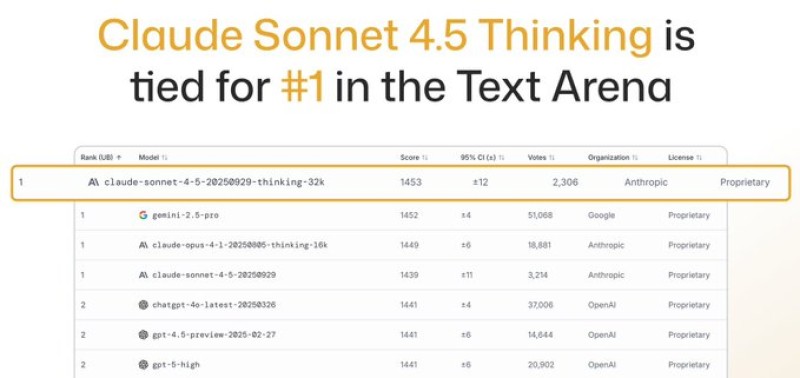

The AI landscape just got more competitive. Anthropic's Claude Sonnet 4.5 Thinking has officially tied for first place in the Text Arena rankings. Text Arena is a leaderboard that ranks large language models by crowdsourced, head-to-head user votes, so it reflects how the models perform in real-world use. The result marks a significant moment in the ongoing race between AI's biggest players. For the first time, we're seeing a genuine three-way battle at the summit, with Anthropic standing toe-to-toe with industry giants Google and OpenAI.

Anthropic's Model at the Top

The result has been celebrated across the AI community and was highlighted in a recent tweet by Lisan al Gaib sharing the latest leaderboard, which shows an incredibly tight race. Claude Sonnet 4.5 Thinking, run with a 32k-token thinking budget, scored 1453 and shares the top position with Google's Gemini 2.5 Pro at 1452. Just behind them, Claude Opus 4.1 Thinking sits at 1449, while OpenAI's GPT-4 and experimental GPT-5 variants hover around 1440–1441.

Top performers: Claude Sonnet 4.5 Thinking (32k) at 1453, Gemini 2.5 Pro at 1452, Claude Opus 4.1 Thinking (16k) at 1449, and GPT-4/5 variants ranging from 1440 to 1441.

What's striking isn't just Claude's top placement but how close these scores are. Only 13 points separate the leader at 1453 from the GPT variants at 1440, a razor-thin margin among the world's most advanced language models.

Why It Matters

Claude's "Thinking" variants use extended reasoning before answering, which suits multi-step logic: complex research synthesis, sophisticated coding tasks, and nuanced professional problem-solving. This strong showing suggests that Anthropic's design choices are paying off. The company has closed what was once a noticeable gap with the established giants while maintaining its reputation for careful AI safety practices.

We've shifted from a two-horse race between OpenAI and Google to a genuine three-way rivalry. This competition drives innovation, with each player pushing the others forward, and we're likely to see more frequent model releases and aggressive feature additions. Because these models are so closely matched, differentiation will increasingly come down to factors like pricing, API reliability, context windows, safety features, and workflow integration.

Usman Salis