● Alex L Zhang recently shared news about Recursive Language Models (RLMs), a breakthrough from MIT CSAIL. This approach lets large language models break down massive inputs and work through them recursively in a REPL-style environment, so they can handle inputs far larger than their native context window.

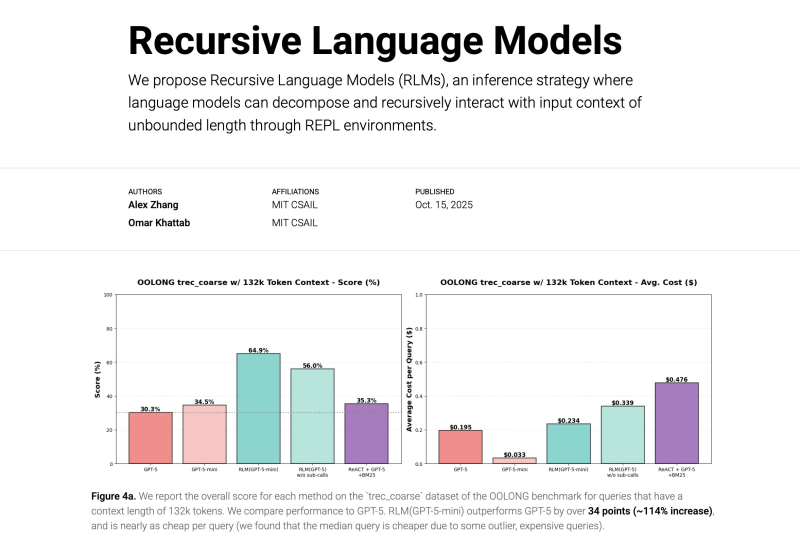
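To make the recursive idea concrete, here is a minimal Python sketch. It is not the MIT CSAIL implementation: it swaps in a fixed split-in-half strategy, where the real system works in a REPL-style environment. The names `recursive_answer`, `toy_llm`, and `max_chars` are hypothetical, and `toy_llm` is a stand-in that just counts `#` characters so the example runs without any API.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt string, returns a text answer.
LLM = Callable[[str], str]


def recursive_answer(query: str, context: str, llm: LLM,
                     max_chars: int = 2000, depth: int = 0,
                     max_depth: int = 5) -> str:
    """Answer `query` over `context`, recursing on halves when it is too long."""
    # Base case: the context fits in one call, or the depth cap is reached.
    if len(context) <= max_chars or depth >= max_depth:
        return llm(f"Context:\n{context}\n\nQuestion: {query}")
    # Recursive case: split the context and answer each half separately.
    mid = len(context) // 2
    partials = [
        recursive_answer(query, part, llm, max_chars, depth + 1, max_depth)
        for part in (context[:mid], context[mid:])
    ]
    # One more call merges the partial answers into a single answer.
    combined = "\n".join(partials)
    return llm(f"Partial answers:\n{combined}\n\nCombine them to answer: {query}")


def toy_llm(prompt: str) -> str:
    """Stand-in for a real model: counts '#' marks, or sums partial counts."""
    body, _, _ = prompt.rpartition("\n\n")       # drop the trailing question
    header, _, payload = body.partition("\n")    # first line says which mode
    if header == "Partial answers:":
        return str(sum(int(line) for line in payload.splitlines()))
    return str(payload.count("#"))


# 10,000 characters containing exactly 100 '#' marks.
context = ("." * 99 + "#") * 100
print(recursive_answer("How many # marks are there?", context, toy_llm))  # prints 100
```

No single call in this sketch sees more than `max_chars` characters of the input, which is the property that lets a small model work over a context it could not read in one pass.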

● The results are striking. On the OOLONG benchmark with 132k tokens, RLMs running on GPT-5-mini hit 64.9% accuracy versus GPT-5's 30.3%, more than doubling the performance. What's more, queries actually cost less on average, which suggests you don't need to blow your budget to scale up context.

● For businesses, this matters. Companies could deploy powerful long-context AI without massive infrastructure spending. Rather than just throwing more compute at the problem, RLMs show how smarter architecture can deliver both scale and efficiency.

● The broader picture is even more impressive. Testing on BrowseComp-Plus showed RLMs with GPT-5 handling over 10 million tokens in a single prompt, answering complex compositional queries accurately and even beating retrieval-based systems. This could fundamentally change how we think about AI scalability and working with huge datasets.

Saad Ullah
