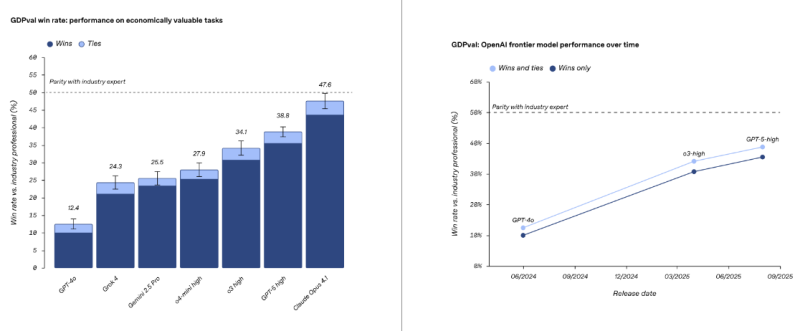

The AI landscape just got more competitive. According to a recent announcement from OpenAI, Claude Opus 4.1 has claimed the top position on GDPval, a benchmark that measures how well AI models match human expert performance. This development highlights both Anthropic's growing capabilities and the breakneck pace of AI advancement across the industry.

Claude Takes the Lead

Expert evaluators found that nearly half of Claude Opus 4.1's responses matched or exceeded expert-level quality, making it the strongest performer on GDPval. This represents a significant win for Anthropic as it competes with OpenAI, Google, and other major players in the large language model space.

Interestingly, OpenAI's own data revealed that its frontier models have nearly doubled their win rates in just one year, a sign of how quickly the whole field is progressing.

Understanding GDPval

Unlike benchmarks that focus on speed or computational scale, GDPval evaluates how closely AI-generated content matches the reasoning and quality standards of human experts. This makes it particularly valuable for professional applications in law, finance, healthcare, and research where accuracy and reliability are non-negotiable.

The improvements across multiple models suggest that AI systems are becoming genuinely more capable rather than simply faster or larger.

What This Means for the Industry

These results signal several important shifts. Anthropic's success with Claude Opus 4.1 establishes a new performance standard that will likely push competitors to enhance their own reasoning capabilities. The rapid improvement rates, particularly OpenAI's near-doubling of performance within twelve months, indicate an industry moving at unprecedented speed.

For businesses, benchmarks like GDPval can build confidence in AI systems by showing how closely model output compares with the work of human experts on realistic professional tasks.

Looking Forward

The GDPval results suggest AI is entering a new phase where success is measured by expert-level reasoning rather than raw computational power. OpenAI's rapid progress shows it is quickly closing any competitive gap, while Claude Opus 4.1's leadership demonstrates that Anthropic's emphasis on alignment and reasoning is delivering tangible results.

As the competition intensifies between these tech giants, we can expect even more impressive benchmarks and capabilities to emerge in the coming months.

Peter Smith