A groundbreaking research paper titled "The Attacker Moves Second: Stronger Adaptive Attacks Bypass Defenses Against LLM Jailbreaks and Prompt Injections" has dropped a bombshell in the AI security world. The findings are sobering: defenses that supposedly had near-perfect track records crumble when faced with adaptive, real-world attack methods.

A Landmark Collaboration Uncovers a Major Security Gap

According to prominent AI security analyst God of Prompt, the study's implications are massive. The research brings together heavy hitters from OpenAI, Anthropic, Google DeepMind, ETH Zürich, Northeastern University, and HackAPrompt—a rare cross-industry team focused on stress-testing LLM defenses.

Their verdict is brutal: most existing defenses fail spectacularly when attackers adapt their approach. Current defenses are usually tested against static, weak attack methods that don't reflect real threats. To get a realistic picture, the researchers threw adaptive attackers at these defenses—systems or people who adjust their tactics on the fly.

The Results: 0% Failure Rates Become 90–100% Failure Rates

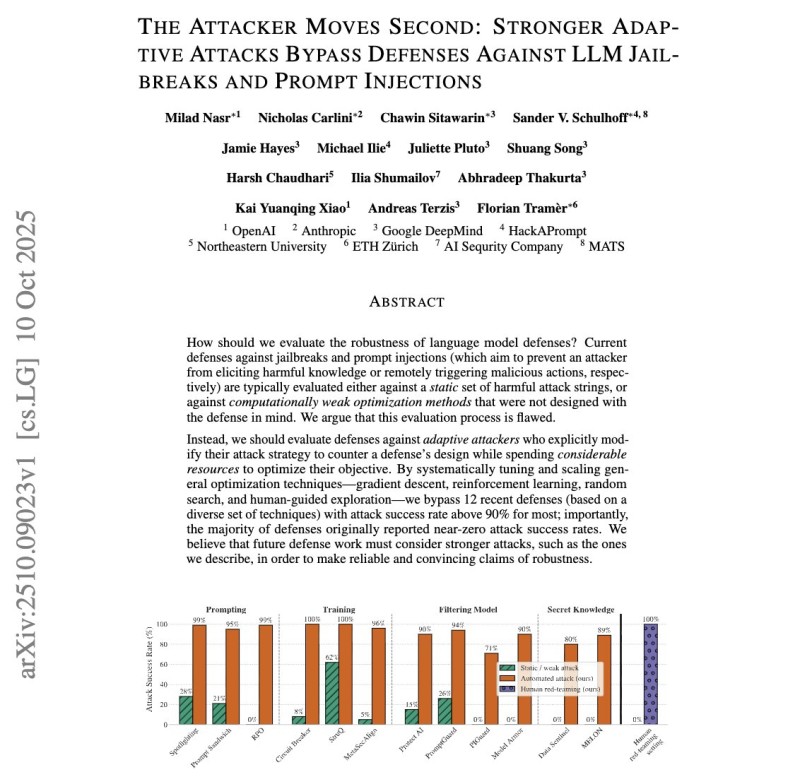

The numbers tell a devastating story. Prompting defenses like Spotlighting and RPO went from 0% failure to 95–100% broken. Training-based defenses such as Circuit Breakers and StruQ collapsed from 2% to 96–100% failure rates. Filtering models like ProtectAI and PromptGuard jumped from 0% to 71–94% broken. Even proprietary defenses like MELON and Data Sentinel saw failure rates spike from 0% to 80–89%. One bypass simply reframed harmful commands as "a prerequisite workflow" and sailed right through. Human red-teamers achieved 100% success where automated attacks completely failed.

Why Static Benchmarks Are Failing

Testing defenses against outdated attack methods is like checking if your door lock works against burglary techniques from five years ago. Real attackers adapt, iterate, and combine human creativity with optimization tools. The researchers tested gradient descent, reinforcement learning, random search, and human-guided adversarial exploration. Every defense category collapsed under pressure.

A Multi-Year AI Security Crisis Accelerates

Adversarial machine learning has been playing whack-a-mole for years, but the cycle has compressed from years down to months. A $20,000 red-teaming competition with over 500 participants proved the point: every defense failed. This matters because LLMs are now baked into enterprise workflows, cybersecurity tools, autonomous systems, customer service, healthcare, and financial services. Companies might be deploying systems thinking they're secure when they're actually wide open.

Sergey Diakov

Sergey Diakov

Sergey Diakov

Sergey Diakov