The AI coding landscape just got a major shakeup. xAI's Grok 4 Fast has taken the top spot on the LiveCodeBench coding benchmark with a score of 83%, ahead of models from Google, Alibaba, DeepSeek, OpenAI, and Anthropic. The result signals a shift in the competitive dynamics of developer-focused AI tools.

Benchmark Performance Overview

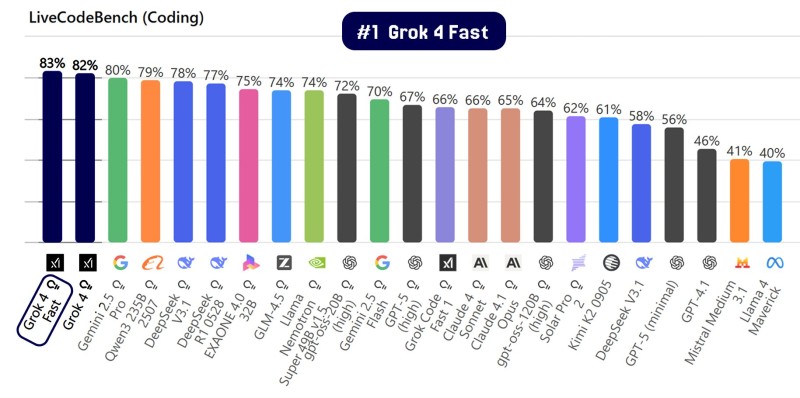

According to AI commentator X Freeze, the LiveCodeBench results show a clear ranking: Grok 4 Fast leads at 83%, followed by Grok 4 at 82%, Google's Gemini 2.5 Pro at 80%, Qwen3 235B at 79%, DeepSeek V3.1 at 78%, OpenAI's GPT-5 (high) at 70%, Claude Sonnet 4 at 65%, Mistral Medium at 41%, and Llama 4 Maverick at 40%.

The results are particularly striking for OpenAI. GPT-5 (high) scores just 70%, which puts it 13 points behind Grok 4 Fast and also behind Google's Gemini 2.5 Pro, Alibaba's Qwen3, and DeepSeek V3.1. This is a marked departure from earlier benchmarks, where OpenAI's models typically led.
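To make the gaps between models easier to see, the short Python sketch below ranks the scores quoted above and computes each model's distance from the leader in percentage points. The scores are the ones reported in this article. The function name `gaps_from_leader` and the shortened model labels are illustrative choices, not part of LiveCodeBench or any vendor tooling.

```python
# Scores (percent) as quoted in this article; labels are shortened for display.
scores = {
    "Grok 4 Fast": 83,
    "Grok 4": 82,
    "Gemini 2.5 Pro": 80,
    "Qwen3": 79,
    "DeepSeek V3.1": 78,
    "GPT-5 (high)": 70,
    "Claude Sonnet 4": 65,
    "Mistral Medium": 41,
    "Llama 4 Maverick": 40,
}


def gaps_from_leader(scores):
    """Return (model, score, gap) tuples sorted by score, highest first.

    `gap` is the difference in percentage points between the top score
    and the model's own score. This is a hypothetical helper for illustration.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    leader_score = ranked[0][1]
    return [(model, score, leader_score - score) for model, score in ranked]


# Print a simple ranking table.
for model, score, gap in gaps_from_leader(scores):
    print(f"{model:<18} {score:>3}%  (-{gap} pts)")
```

Read this way, the top five models sit within 5 points of each other, while GPT-5 (high) and Claude Sonnet 4 trail the leader by 13 and 18 points respectively.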

Impact on the Developer Ecosystem

LiveCodeBench is a widely watched measure of how well AI models handle coding tasks such as code generation and repair, using competitive-programming-style problems rather than full production codebases. Grok's strong showing suggests developers may see more reliable outputs and better problem-solving assistance. For xAI, the result strengthens its position in both enterprise and open-source markets, while putting pressure on established players like OpenAI, Google, and Anthropic to accelerate their development cycles.

The fact that both Grok variants occupy the top two positions suggests consistency across xAI's model lineup rather than a one-off result, which could matter for enterprise adoption, where reliability often counts for more than peak performance.

Market Implications

The coding AI space has become increasingly competitive as developers integrate these tools into their daily workflows. Strong benchmark performance can help drive market adoption, which makes these results potentially significant for xAI's commercial prospects. While Grok currently leads, the competition remains fierce, with Google investing heavily in Gemini's capabilities, OpenAI refining its Codex technology, and DeepSeek improving rapidly.

This benchmark upset could push the industry to reassess its development strategies and timelines, potentially accelerating the pace of innovation as the major AI labs work to regain ground in this lucrative market segment.

Saad Ullah
