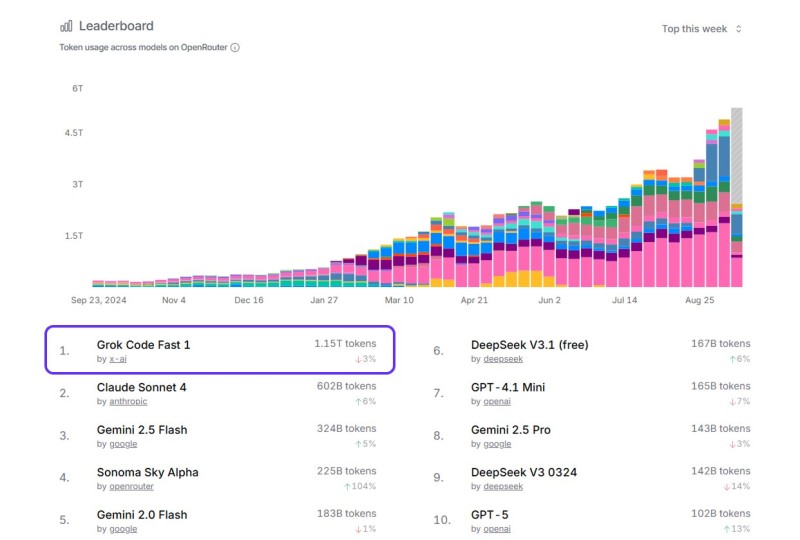

Grok Code didn't just climb to the top of OpenRouter's leaderboard; it demolished the competition and stayed there for 22 straight days. The numbers are staggering: Grok Code Fast 1 processed 1.15 trillion tokens, nearly double Claude Sonnet 4's 602 billion, while Google's Gemini trails at 324 billion. A gap that wide signals that something fundamental has changed in the market.

Why This Matters

AI commentator @amXFreeze pointed out what many missed: this isn't just another model launch. It reflects a shift in how developers think about AI tools.

OpenRouter tracks real usage by real developers. When a model processes over a trillion tokens in a matter of weeks, developers are betting their projects on it. Most AI models take months to build momentum. Grok Code hit #1 by day three and never looked back.

The Secret Behind Grok's Takeover

Three key factors explain Grok Code's dominance:

- Laser focus on coding: while others tried to do everything, Grok Code mastered writing software that works.

- Built for speed: no waiting around when you're debugging at 2 AM.

- Word-of-mouth explosion: when something works this well, developers talk.

This wasn't about marketing budgets. Pure performance won over the people who matter most.

Grok Code just rewrote the AI playbook. Specialized models are crushing generalist ones that try to do everything. OpenAI, Anthropic, and Google are now playing catch-up to a model that came out of nowhere.

For developers, this means better tools built specifically for coding rather than generic AI that happens to code okay. The era of "one model fits all" might be over before it began.

Saad Ullah