AMD just landed a serious blow in the AI chip wars. New benchmarks from Signal65 show the MI355X outperforming NVIDIA's flagship B200 across multiple AI inference tasks, with throughput advantages reaching 50% in some scenarios.

Key Performance Metrics:

Market analyst Daniel Romero called it AMD's strongest showing yet against NVIDIA's dominance.

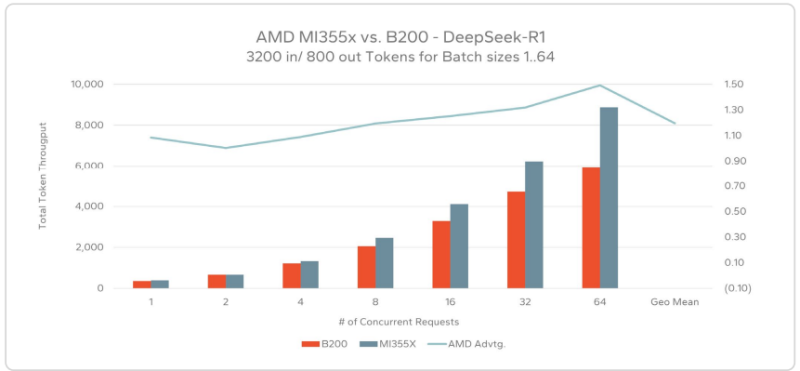

- DeepSeek-R1 inference: 1.0x to 1.5x the B200's throughput, depending on load

- Peak performance: Nearly 9,000 tokens/sec at 64 concurrent requests vs B200's ~6,000

- Llama3.1-405B: Up to 2x NVIDIA's published scores

- MLPerf LoRA fine-tuning: 10% advantage over B200

- Generation leap: 2.93x improvement over AMD's own MI300X
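As a quick sanity check on the headline number, the peak-throughput figures above can be turned into a ratio. This is only a sketch of the arithmetic using the rounded values quoted in this article ("nearly 9,000" and "~6,000" tokens/sec); the function name `throughput_ratio` is illustrative and not part of any benchmark tooling.

```python
# Rounded figures quoted in the article (tokens/sec at 64 concurrent requests).
mi355x_tokens_per_sec = 9_000  # "nearly 9,000", so treat as an upper-end estimate
b200_tokens_per_sec = 6_000    # "~6,000"


def throughput_ratio(candidate: float, baseline: float) -> float:
    """Return the candidate's throughput as a multiple of the baseline's."""
    if baseline <= 0:
        raise ValueError("baseline throughput must be positive")
    return candidate / baseline


ratio = throughput_ratio(mi355x_tokens_per_sec, b200_tokens_per_sec)
advantage_pct = (ratio - 1.0) * 100.0
print(f"{ratio:.2f}x the B200's throughput ({advantage_pct:.0f}% advantage)")
```

With these rounded inputs the ratio comes out at about 1.5x, which lines up with the "up to 50%" throughput advantage cited at the top.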

The real story isn't just raw speed; it's how AMD's chip scales under pressure. While NVIDIA's B200 starts to plateau at high concurrency, the MI355X keeps climbing. At 64 concurrent requests it still holds its throughput lead, which suggests an architecture well suited to high-concurrency enterprise workloads.

What makes this even more impressive is the single-system performance. One 8-GPU MI355X system completed fine-tuning 9.6% faster than a four-node, 32-GPU MI300X cluster. That's not just better performance; one node in place of four also means simpler deployment and, potentially, lower operational costs.

For NVIDIA shareholders, this isn't catastrophic, but it's concerning. The B200 still leads in many areas, but AMD is closing gaps fast. If major cloud providers start testing MI355X for their inference workloads, NVIDIA's pricing power could take a hit.

AMD investors should be cautiously optimistic. Strong benchmarks don't automatically translate to sales, especially in a market where NVIDIA's CUDA ecosystem still dominates. But with AI chip demand projected to hit $400B by 2030, even capturing 15-20% market share would be transformative for AMD.
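To put the market-share scenario in dollar terms, here is the back-of-the-envelope arithmetic. It takes the $400B projection and the 15-20% share range at face value from the paragraph above; the helper name `implied_share_value` is hypothetical, and the output is an illustration of scale, not a forecast.

```python
# Inputs taken from the article; both are projections, not reported results.
projected_market_usd_billion = 400.0  # AI chip demand projected for 2030
share_low, share_high = 0.15, 0.20    # hypothetical AMD market-share range


def implied_share_value(market_usd_billion: float, share: float) -> float:
    """Return the dollar value (in billions) of a given share of the market."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return market_usd_billion * share


low = implied_share_value(projected_market_usd_billion, share_low)
high = implied_share_value(projected_market_usd_billion, share_high)
print(f"Implied value of a 15-20% share: ${low:.0f}B to ${high:.0f}B")
```

Under those assumptions, a 15-20% share would correspond to roughly $60B to $80B of the projected market.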

The bigger picture? We're witnessing the end of NVIDIA's near-monopoly in AI acceleration. Real competition is emerging, and that's ultimately good news for everyone building AI systems. The question now is whether AMD can convert these benchmark wins into actual customer wins in 2025.

Usman Salis
